An introspection on Chatbots, AI & The Future of Privacy

by Harshvardhan J. Pandit

article privacy

Chatbots, AI & The Future of Privacy by Stefan Kojouharov

https://chatbotslife.com/chatbots-ai-the-future-of-privacy-174edfc2eb98

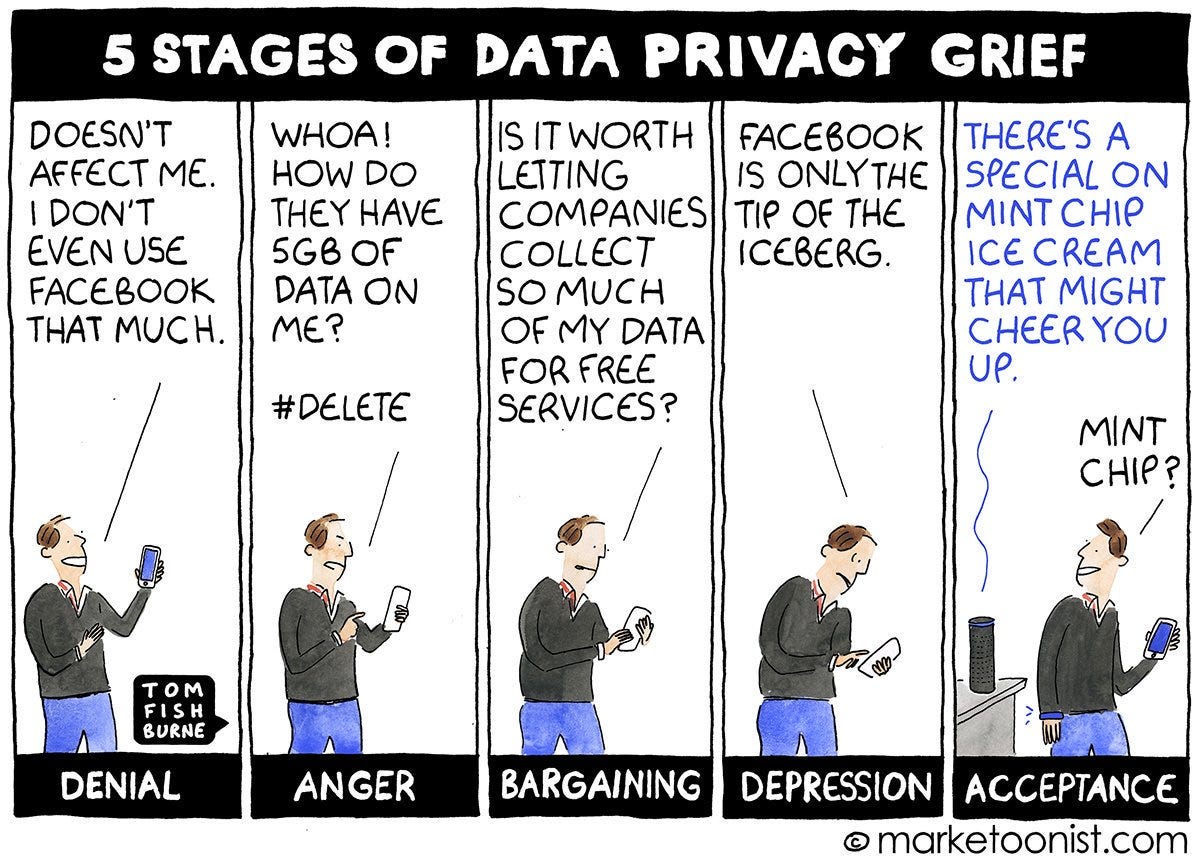

This post is a collection of thoughts I had while reading the article mentioned above. I originally came across the article on Twitter, when a close friend mentioned me in a retweet. This warranted a thorough read, though I suspect it was only the image that I was meant to look at. Regardless, I ended up reading much more than I had intended, and decided to type down my thoughts as I went along. The rest of this post is essentially a ramble through those thoughts.

Data pods

One of SEED’s inherent strengths is its ability to manage security and privacy over the SEED network.

Put in simple terms, people will have the power to control the data they share with companies (instead of the limited or lack of control they have today).

It looks like a glaring omission when the article so succinctly describes the project and its intended goal, but fails to mention Tim Berners-Lee's ambitious Solid project.

GDPR

Part of the reason we are creating SEED is to give users some control over their data: who has access to what kinds of their data.

...

For example, Facebook has a profile on me, thanks to the interactions of other people on Facebook, even though I’ve never created an account with Facebook. And, I can’t do anything about that data.

Okay, I get it, you are an American company. But the truth is that the GDPR has, and will continue to have, a large impact on how transparent organisations are about their collection and usage of personal data. To not mention it in the article is just plain bad.

Stefan: What are the most important questions we should be asking about privacy and our data?

Nathan: This is very context dependent.

Who is collecting data on you?

Do you know what kind of data they are collecting?

Do you know what they are doing with the data?

Again, the GDPR not only gives you the right to know the answers to these questions, but also places an obligation on companies to provide this information to you. This should be mentioned more, everywhere.

Stefan: In the EU, citizens own their own data and can request companies to delete it from their servers. Is this an option that Seed will provide to users?

Nathan: Certainly. This is something we have to do because of GDPR. Fortunately, the largest companies are already preparing for this and laying the framework.

We will certainly support GDPR-level privacy controls for European citizens and there is no reason why we shouldn’t extend this level of control to all users. However, there might not be facilitation on the other side. For example, some US companies might not have the API to delete a user’s data. Lastly, it is fully within a company’s rights to deny service if they do not get sufficient data.

Will apps that are not compatible with the GDPR then be unable to access the user's data?

Metadata is the real data

It’s one thing that Alexa records everything you say, it is another when they process that data, track your location, and create meta-data on top of it.

This gets right to the heart of the practice. Simply having loads of data is never enough; it is the metadata attached to it that provides context and gives the data its real value. Unfortunately, while some of the data being collected is known, almost none of the metadata practices are out in the open today. Even with laws like the GDPR, getting metadata out of these ecosystems, or even just knowing that it exists, is not possible. Hopefully, this improves in the future, before it is too late to stop this invasion of privacy.

Possibilities stretch in both directions

For example, it’s possible now to diagnose some forms of mental illness with a decent degree of accuracy on under a minute of recorded audio. That’s the kind of thing that can be done with the data that these companies collect. Most people don’t even know that is possible, let alone that it may be happening.

Every time someone makes this argument, that having data can lead to better things, I am tempted to point out that the data can just as easily be misused by almost anybody. Case in point - Uber's manipulation of its drivers. The same data could have been used to give the drivers more harmonious growth as well as control over how much they want to work. Instead, it was purportedly used (or looked into) for insidious purposes for the sake of corporate profit. So any time someone says that giving this data may one day save your life, I would like to point out - not before filling the pockets of many, and with an indirect harm to me, either via loss of value or invasion of privacy.

Always recording

We are being recorded in audio and it is common to see Google automatically suggest a search term you were just discussing with a friend or to see advertisements follow you online as the result of an obscure comment you made in a conversation with a friend.

I cannot believe someone speaking authoritatively on this topic just accepted this as a fact and continued as if this was the norm. Here are a few articles that demonstrate what we can actually expect from these always-listening devices (tl;dr: not much; such incidents are bizarre but not the norm, and should not just be accepted as standard practice like cookies): "Hey, Alexa, What Can You Hear? And What Will You Do With It?"; "Is Alexa Listening? Amazon Echo Sent Out Recording of Couple’s Conversation"; "Some apps were listening to you through the smartphone’s mic to track your TV viewing, says report"; "That Game on Your Phone May Be Tracking What You’re Watching on TV".

Choice?

In fact, we can argue that people have a responsibility to share their data so that, in the aggregate, it can be used to inform important research — like into medical research. What they care about is specific data being shared in a personal way — that’s the creepy betrayal of their trust.

Do we have a social responsibility to share our data? Like for medical research? The person who said the above certainly seems to think so. The actual question is, do we have a choice? Can we choose what is acceptable and what is not acceptable? Hospitals that have the data can use it safely because a) they are hospitals and they generated that data, though it is still tied to patients as individuals, and b) they are heavily regulated regarding how they use that data. If I have a health app, does the app take it upon itself to 'share my data for social responsibility'?

Psychological Influence

Stefan: In a recent scientific experiment, scientists were able to influence what choice the subject made using electrodes. The subjects thought they were making the choice when, in fact, it was the scientists. How will AI/bots influence free will?

One specific answer - marketing and advertising research. Period.

The use of psychology has perhaps had its greatest effect in marketing and advertising, and one should expect the same to bleed into anything that comes out in the future regarding user interaction and behaviour.

We have never had as much free will as we’ve believed but, now, there is a risk that this will be reduced even further.

This is a statement that can be painted as either true or false depending on which use-cases one picks, which makes it dangerous to use in articles. People usually have a context in mind and state something as a fact, but fail to attach that context to the statement. It is very important to spell out exactly what is meant when talking about sensitive things; otherwise the statement risks being misinterpreted. For example, if I consider that online behaviour is becoming increasingly nefarious, with less user control and more centralisation, then the statement holds true. On the other hand, if I bring in recent laws and practices that demand more transparency than, say, two years ago, then the statement can be argued to be false.

Stefan: In the near future, bots & AI will do A/B tests on an individual level. What happens when it gets to a point where it is no longer looking at the aggregate? What happens when A/B tests are done a person’s biases, values, beliefs, motivations, decision making, etc?

We are already seeing this, in some measure, when people reinforce their biases through the filter bubble effect. Algorithms inherently feed into biases whether we program them to or not. This is the real challenge of tomorrow's AI.

Control?

Stefan: So, when the Bank of America bot collects user information this won’t be shared with the SEED platform? What if Bank of America shares their user data with third parties?

Nathan: That is correct. The data does not pass through us or come to our network. As far as Bank of America sharing their data with third parties, that will be a part of their terms of use and disclosed on the front end. They will need to disclose who they are sharing it with and they have to live up to their licensing agreement. Otherwise, they can be taken off the network.

Will the users get this gatekeeper-like control, or will the SEED platform do the 'policing' in its users' interests?

Conclusion

In conclusion, I was not impressed by the article, nor did I find it to be particularly unfair to the topic. I have the (dis-)advantage of researching this area, so I am pickier about some of the things said. Overall, though, I think the article makes some fair points, but fails to elucidate how they should translate into better things. It is stuck somewhere between presenting a vision and debating its activities.