AIUP: an ODRL Profile for Expressing AI Use Policies to Support the EU AI Act

International Conference on Semantic Systems (SEMANTiCS)

✍ Delaram Golpayegani*, Beatriz Esteves, Harshvardhan J. Pandit, Dave Lewis

Description: Expressing AI Use Policies for the AI Act as an extension/profile of the W3C ODRL standard.

published version 🔓open-access archives: harshp.com

📦resources: repo, poster

Abstract

The upcoming EU AI Act requires providers of high-risk AI systems to define and communicate the system's intended purpose – a key and complex concept upon which many of the Act's obligations rely. To assist with expressing the intended purposes and uses, along with precluded uses as regulated by the AI Act, we extend the Open Digital Rights Language (ODRL) with a profile to express the AI Use Policy (AIUP). This open approach to declaring use policies enables explicit and transparent expression of the conditions under which an AI system can be used, benefiting AI application markets beyond the immediate needs of high-risk AI compliance in the EU. AIUP is available online at https://w3id.org/aiup under the CC-BY-4.0 license.

AI Act, ODRL, AI use policy, AI risk management, regulatory enforcement, trustworthy AI

Introduction

Within the EU AI Act [1] there is a strong emphasis on intended purpose – a legal term of art defined as the use of the system specified by the provider, which should include information regarding the context and conditions of use (AI Act, Art. 3). Given its importance in assessing the risk level under the Act [2], and in turn in ensuring safe and trustworthy use of AI, the intended purpose of an AI system should be communicated to its deployers in a transparent manner. In this paper, we aim to simplify the specification of this key concept by adopting a policy-based approach. To this end, we propose extending the W3C Recommendation for the Open Digital Rights Language (ODRL)¹ with an AI Use Policy (AIUP) profile for representing intended purpose. AIUP serves as a mechanism for expressing an AI system’s intended and precluded uses, as well as its conditions of use, by modelling them as permissions, prohibitions, and duties within a policy.

Related Work

ODRL has been leveraged for legal compliance and policy enforcement, particularly in EU GDPR compliance tasks such as automated checking of consent permissions [3], expressing legal obligations [4], and modelling the obligations in terms of permissions and prohibitions regarding executing business processes [5]. In the context of data governance, ODRL was extended for expressing policies related to access control over data stored in Solid Pods [3], utilised for modelling policies associated with responsible use of genomics data [6], and used in expressing data spaces’ usage and access control policies [7], [8].

AIUP

AIUP Requirements

AIUP is intended to be used by AI providers and deployers to communicate and negotiate the conditions under which an AI system can or cannot be used. The competency questions that shape the requirements of the policy profile are extracted from the AI Act and listed below:

CQ1. What are the intended use(s) of the AI system? (Art. 13 and Annex IV(1a))

CQ2. What are the precluded use(s)² of the AI system? (Recital 72)

CQ3. To use the system as intended, what human oversight measure(s) should be implemented by the deployer? (Art. 14(3)(b))

CQ4. What are the reporting obligation(s) of the deployer? (Art. 26(5))

To express intended and precluded uses, we use the five concepts identified in our previous work [9]: domain, purpose, AI capability, AI deployer, and AI subject. To further capture the context of use, we also include locality of use.

AIUP Overview

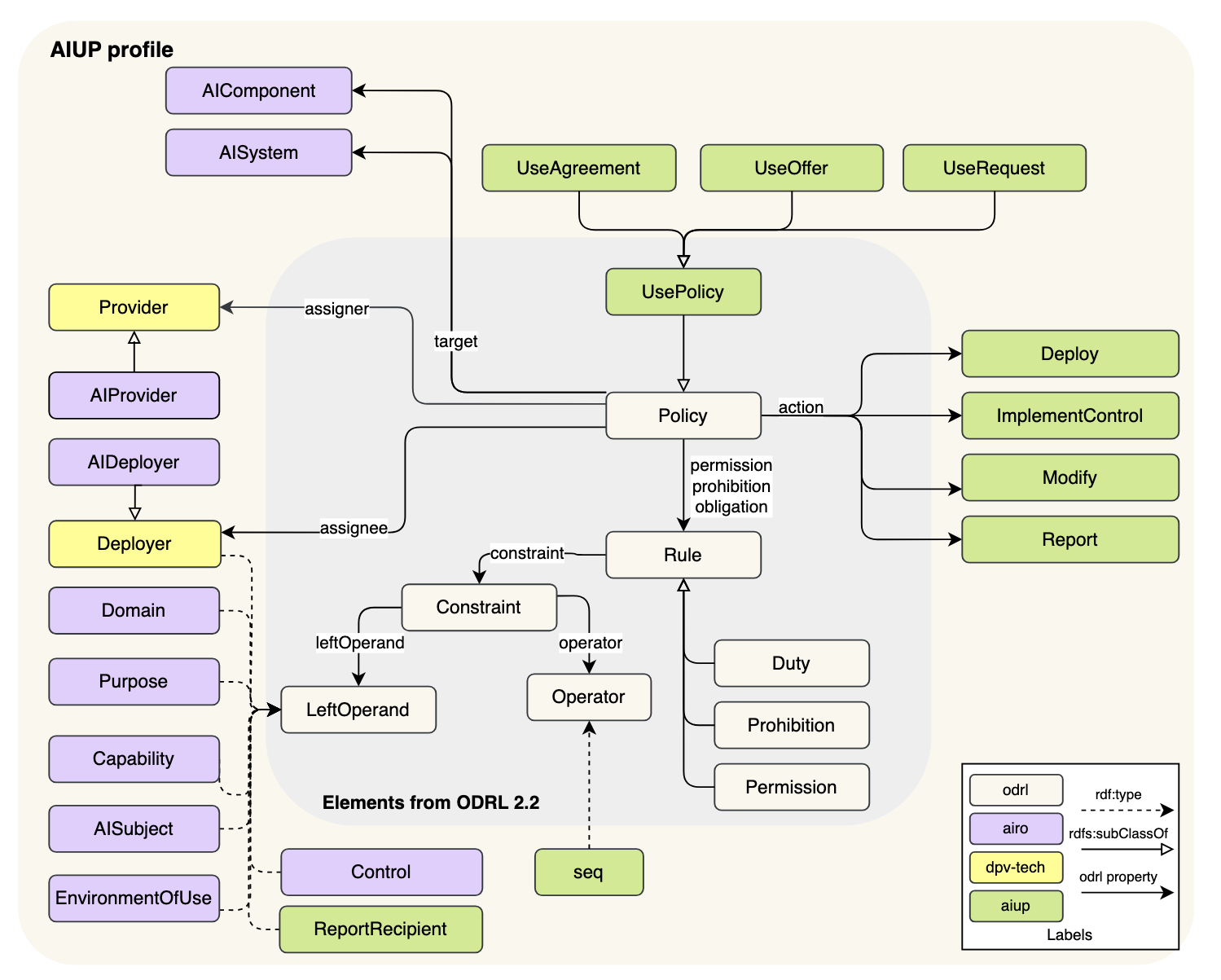

An overview of the AIUP profile is illustrated in Figure 1. Intended and precluded uses of an AI system or component are expressed within a policy using odrl:permission and odrl:prohibition rules respectively. Conditions of use, i.e., obligations that a party should fulfil in order to use a system or component, are expressed using the odrl:duty property. The vocabulary used in AIUP is defined in alignment with the AI Risk Ontology (AIRO) [10] and the Data Privacy Vocabulary (DPV) [11]. The development follows the ODRL V2.2 Profile Best Practices³, which require the terms to be defined in the policy namespace (in this case aiup), with skos:exactMatch linking the proposed terms to existing vocabularies.
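To make the rule types concrete, the following is a minimal sketch of how a precluded use might be written as an odrl:prohibition. It reuses only profile terms that appear in this paper (aiup:UseOffer, aiup:Deploy, aiup:Purpose, and the aiup:seq operator); ex:provider, ex:system, and ex:PrecludedPurpose are hypothetical placeholders and not terms from AIUP or any aligned vocabulary.

```turtle
@prefix odrl: <http://www.w3.org/ns/odrl/2/> .
@prefix aiup: <https://w3id.org/aiup#> .
@prefix ex:   <http://example.org/> .

# Hypothetical offer: the provider precludes deploying the system
# for one specific purpose (all ex: names are placeholders).
ex:offer-precluded-use a aiup:UseOffer ;
    odrl:uid ex:offer-precluded-use ;
    odrl:profile aiup: ;
    odrl:prohibition [
        a odrl:Prohibition ;
        odrl:assigner ex:provider ;
        odrl:target ex:system ;
        odrl:action aiup:Deploy ;
        odrl:constraint [
            odrl:leftOperand aiup:Purpose ;
            odrl:operator aiup:seq ;
            odrl:rightOperand ex:PrecludedPurpose ] ] .
```

The prohibition mirrors the shape of a permission: same assigner, target, and action, with the constraint narrowing the rule to the precluded purpose.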

AIUP introduces three types of aiup:UsePolicy: aiup:UseOffer, aiup:UseRequest, and aiup:UseAgreement. These enable expressing offers, requests, and agreements from or between AI providers and deployers. To address the ambiguity over whether odrl:isA covers “sub-class of” relations, we introduce semantic equality (aiup:seq), which indicates the presence of either an “instance of” or a “sub-class of” relation. AIUP allows describing use policies for AI components, such as general-purpose AI models, by specifying the general concepts of aiup:AIComponent, aiup:Provider, and aiup:Deployer. However, the more specific elements required for expressing component use policies are left for future work. AIUP is made available online at https://w3id.org/aiup under the CC-BY-4.0 license.
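As an illustration of the policy types and of aiup:seq, here is a hedged sketch of a deployer's aiup:UseRequest. The resources ex:university, ex:system, and ex:HigherEducation are hypothetical, and the sub-class statement is an assumption made only for this example; the vair: prefix is written as it appears in this paper's example policy.

```turtle
@prefix odrl: <http://www.w3.org/ns/odrl/2/> .
@prefix aiup: <https://w3id.org/aiup#> .
@prefix vair: <http://w3id.org/vair#> .
@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .
@prefix ex:   <http://example.org/> .

# Assumed taxonomy for this sketch only: a narrower domain class.
ex:HigherEducation rdfs:subClassOf vair:Education .

# Hypothetical request: a deployer asks to deploy the system
# in the narrower domain.
ex:request-01 a aiup:UseRequest ;
    odrl:uid ex:request-01 ;
    odrl:profile aiup: ;
    odrl:permission [
        a odrl:Permission ;
        odrl:assignee ex:university ;
        odrl:target ex:system ;
        odrl:action aiup:Deploy ;
        odrl:constraint [
            odrl:leftOperand aiup:Domain ;
            odrl:operator aiup:seq ;
            odrl:rightOperand ex:HigherEducation ] ] .
```

Under the reading of aiup:seq given above, a domain of ex:HigherEducation would fall within an offer constrained to vair:Education, because the former is declared a sub-class of the latter. How a policy engine would actually evaluate this comparison is not specified here.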

AIUP Example

As an example scenario, we consider a policy for an online student proctoring system called Proctify, previously described in [12]. The conditions of deploying Proctify, expressed as an aiup:UseOffer policy, are presented in Listing 1. For brevity, we include only three constraints, describing the intended domain, purpose, and AI subjects. The offer indicates that the deployer should provide training to end-users of the system as a control measure to address the risk of over-reliance on the system’s output.

    @prefix odrl: <http://www.w3.org/ns/odrl/2/> .
    @prefix aiup: <https://w3id.org/aiup#> .
    @prefix vair: <http://w3id.org/vair#> .
    @prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .
    @prefix dct:  <http://purl.org/dc/terms/> .
    @prefix ex:   <http://example.org/> .

    ex:proctify-offer-01 a aiup:UseOffer ;
        odrl:uid ex:proctify-offer-01 ;
        odrl:profile aiup: ;
        rdfs:comment "Offer for using Proctify"@en ;
        # Intended use: deploying Proctify is permitted under the constraints below
        odrl:permission [
            a odrl:Permission ;
            odrl:assigner ex:aiedux ;
            odrl:target ex:proctify ;
            odrl:action aiup:Deploy ;
            # All three constraints (domain, purpose, AI subject) must hold
            odrl:constraint [
                odrl:and [
                    odrl:leftOperand aiup:Domain ;
                    odrl:operator aiup:seq ;
                    odrl:rightOperand vair:Education ] ,
                [
                    odrl:leftOperand aiup:Purpose ;
                    odrl:operator aiup:seq ;
                    odrl:rightOperand vair:DetectCheating ] ,
                [
                    odrl:leftOperand aiup:AISubject ;
                    odrl:operator aiup:seq ;
                    odrl:rightOperand vair:Student ] ] ;
            # Condition of use: the deployer must implement a training control
            odrl:duty [
                dct:title "User training to address over-reliance" ;
                odrl:action aiup:ImplementControl ;
                odrl:constraint [
                    odrl:leftOperand aiup:Control ;
                    odrl:operator aiup:seq ;
                    odrl:rightOperand vair:Training ] ] ] .

Conclusion

In this paper, we proposed AIUP as a novel technical solution for declaring AI use policies in an open, machine-readable, and interoperable format based on the evolving requirements of the AI value chain, particularly the obligations of the EU AI Act. The AIUP profile supports modelling and comparison of use policies related to AI systems and their components. It further assists AI auditors and authorities in investigation of non-compliance and ascertaining liable parties when investigating claims concerning AI.

This project has received funding from the EU’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 813497 (PROTECT ITN) and from Science Foundation Ireland under Grant #13/RC/2106_P2 at the ADAPT SFI Research Centre. Beatriz Esteves is funded by SolidLab Vlaanderen (Flemish Government, EWI and RRF project VV023/10). Harshvardhan Pandit has received funding under the SFI EMPOWER program.

References

² Refers to the uses of an AI system that are prohibited by the provider.

Prior Publication Attempts

This paper was published after 2 attempts. Before being accepted at this venue, it was submitted to: SEMANTiCS (full paper)