Comprehensive Review and Future Research Directions on ICT Standardisation

MDPI Information

✍ Mohammed Mahdi* , Ray Walsh , Sharon Farrell , Harshvardhan J. Pandit

Description: A large-scale quantitative analysis for papers related to standards to understand how standards play an important role in innovation

published version 🔓open-access archives: harshp.com

Abstract: Standards play a significant role in our daily lives, often to a greater extent than we may realize. They are crucial to maintaining the current level of organization in our lives, and standardization is one of the most important factors that has contributed to the development of our modern society. Without standards, we would struggle to do simple things that we now take for granted. Consider what would happen if train timings and track widths were not standardized, or if we couldn't use our mobile devices once we were out of range of our operators' networks, such as while traveling abroad. In this paper, we perform a large-scale quantitative analysis of papers dealing with (1) standards and (2) ICT data in three important databases, namely Web of Science, IEEE Xplore, and the ACM Digital Library. These three databases yielded 216 articles, which were divided into five categories. The first category includes standard-related review and survey studies. The second category includes papers on managing information across both hardware and software standards. The third category contains papers dealing with energy management standards and measuring the classification performance of machine learning models. The fourth category groups publications that offer criteria and motivation for developing techniques to aid in the creation of standards for privacy-aware software systems. The fifth category covers standards for health information and communications technology meant to promote compatibility and interoperability among separate systems. In conclusion, our contribution provides a deeper comprehension of standards and their fundamental attributes, as well as an essential foundation for future research. In addition, we demonstrate that standards play an important role in innovation.

Introduction

Standards in information technology have become increasingly significant as the use and relevance of computers and computer networks have expanded. Individual and corporate purchasers of information processing equipment and software have begun to demand that the services and equipment they buy adhere to specific standards [1].

Since the late 1990s, there has been a significant increase in standardization efforts within the information technology field, resulting in the creation of a larger volume of standards compared to all other standardization endeavors combined [1].

Standardisation is widely recognized as a crucial factor in facilitating the execution of research and innovation endeavors. Existing standards are commonly employed in a significant multitude of projects to enhance accessibility to cutting-edge knowledge and assist researchers in incorporating the most recent advancements in regulations, industry practices, policies, and technologies [2].

This study emphasizes an additional significant advantage, namely that standardization facilitates the organization of the entire research and innovation process by fostering discussions regarding the specific deliverables of the project. The study also demonstrates that references pertaining to standardisation within the call topics serve as a significant motivating factor in the decision to incorporate standardisation.

In recent years, Information and Communication Technology (ICT) has received considerable attention. During this period, it was determined that ICT could assist standardisation bodies in achieving their strategic objectives. Additionally, academic research has contributed to making standardisation more efficient and better able to manage its resources [3].

From this relatively small start, it has taken a long time for ICT standards to be recognized as an important part of the information society, but they now are. It is also widely accepted that standards are essential to the economy [4], that they support innovation, and that they are necessary tools for transferring technology and knowledge [5], [6].

Standards can have an underpinning or connecting function at the level of basic research, such as agreeing nomenclature or basic testing. Standards can help to advance research and innovation by enabling and supporting areas including design, testing, compatibility, and quality[7].

Technology standardization refers to the systematic process of aligning applications and IT infrastructure with a set of established standards that are in line with the organization’s business strategy, security policies, and objectives. The implementation of standardized technology has been observed to decrease complexity and provide various advantages, including cost savings achieved through economies of scale, simplified integration processes, enhanced operational efficiency, and improved IT support. Additionally, it streamlines the administration of information technology [8].

Standards may be national, European, or international. Numerous standards originate at the national level to cater to local requirements, but subsequently gain broader acceptance across Europe through the involvement of organizations such as CEN, CENELEC, and ETSI. These standards may also achieve international recognition through adoption by ISO, IEC, and ITU-T, thereby facilitating global knowledge sharing and international trade [9], [10]. National standards in CEN and CENELEC member countries are derived from European standards. Being a fundamental element of the European Single Market, they exert significant influence on the European economy.

Standards are considered to be voluntary in nature, as they are the result of collaborative efforts between professionals from various sectors such as business, industry, academia, and society. These experts engage in a consensus-building and consultation process with a standardization organization to establish these agreements [11]. Standards serve as the fundamental framework for trade and have the potential to foster innovation by facilitating the sharing of knowledge, promoting optimal methods, and ensuring compatibility. They symbolize the principle of equal opportunity, enabling users of varying sizes to participate in fair competition. Voluntary standards possess the capacity to complement regulatory measures.

The primary objectives of this study are as follows: (1) investigate the conventional and recent developments of relevant state-of-the-art standardization; (2) understand the role of standardization; (3) understand the need for standardization to improve quality; (4) understand the role of standardization requirements and challenges of the ICT sector; and (5) identify the role of standardisation technology and its impact on its environment.

Research Questions: The research questions hold significant importance in determining the search strategy and analysis when conducting a systematic literature review. The research questions (RQs) for this study were identified as follows:

RQ1. What does the current research literature reveal about the Standardisation and ICT methods and topic?

RQ2. What are the main goals, vision, and trends for standardisation, and what research may be highlighted in this field?

RQ3. What are the current research gaps in the field of ICT standardization?

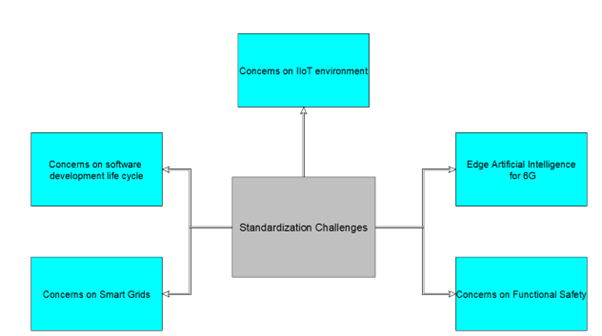

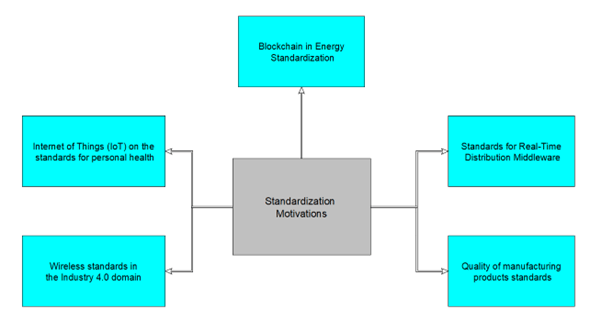

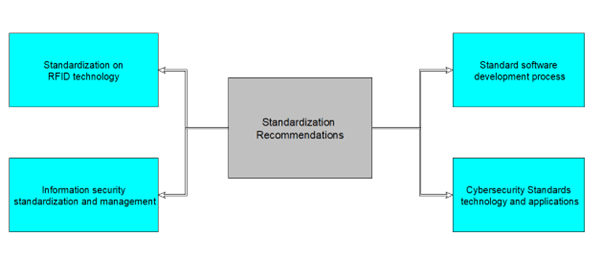

RQ4. What are the current motivations, concerns, challenges, and recommendations for improving the standardization and ICT methods and topic?

RQ5. Can standards play a more prominent role in the attainment of regulatory objectives and the promotion of innovation?

A number of particular study topics helped to further define this goal. These research topics were expanded upon and then utilized as a foundation for the extensive research and analysis tasks completed, as mentioned in the next section.

The remainder of this article is organized as follows. The principles of standards are explained and analyzed in Section 2. The methodology, comprising the information sources, the criteria for research eligibility, the SLR procedure, and the implications of the publications’ search results, is defined in Section 3, which also identifies the research questions (RQs) for this study and the risks to validity that pose significant obstacles to the efficacy of SLRs. The literature taxonomy for standardization technology and ICT was divided into four groups based on the queries run against the three online databases. Section 4 describes and summarizes the survey and review papers on the most recent state of the art in standardization and ICT. Sections 5, 6, 7, and 8 present fresh research prospects for the standardization and ICT areas, and Section 9 discusses the motivations, challenges, suggestions, and a contemporary strategy for the standardization and ICT fields. Section 10 concludes the article.

Preliminary Study

This section presents the fundamental concepts of standardisation, standards bodies, standards catalogues, and other standards.

What is a standard?

Standardization is the process of establishing and implementing regulations, criteria, or specifications in order to ensure uniformity, compatibility, and consistency within a particular field or industry. Because there are so many different types of standards (national, regional, and international), industries (agriculture, transportation, nanotechnology, and medical devices), and topics (procedures, products, services, and methods), it is hard to settle on a single, universally accepted definition of standards and standardization.

Standards Institutes Bodies

Standards organizations often focus their efforts on specific industries characterized by complex and specific requirements, although some develop and distribute comprehensive guidelines for a wide range of disciplines. Several well-known standards organizations can be identified, namely:

National Institute of Standards and Technology (NIST): a US government agency, part of the Department of Commerce, that maintains a large set of software development and security policies, guidelines, and norms that are constantly updated and published.

The International Electrotechnical Commission (IEC): a global standards body responsible for developing and publishing international standards for all electrical, electronic, and related technology.

International Organization for Standardization (ISO): a non-governmental international organization with a membership of 168 national standards bodies, dedicated to the development and publication of International Standards.

IEEE Standards Association (IEEE SA): a globally recognized standards-setting body that facilitates the development of consensus standards through an inclusive approach involving active participation from industry representatives and a wide range of stakeholders.

ISO/IEC: ISO and IEC jointly develop, promote, and maintain industry standards, focusing on communications, information technologies, and electrical and electronic engineering. Some documents are free to download.

What are Standards Catalogues?

We have reviewed the existing standards from multiple standards associations (IEEE SA, ISO, IEC, ISO/IEC). We designed a protocol and followed it to read the standards.

Standards catalogues are compilations of publications that contain standards linked to a given industry, field, or topic. These catalogues can be a valuable resource for individuals, organizations, and scholars looking to obtain and use important standards.

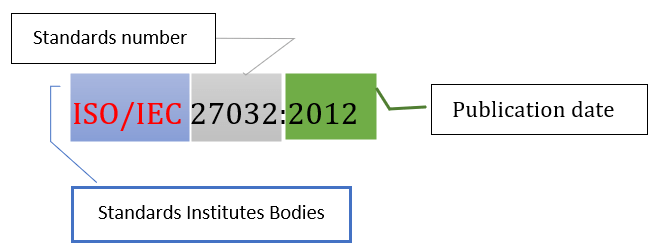

Example: Structure of Standards Institutes Bodies (Standards catalogues)

To understand and interpret a standard, you can follow these steps:

A standard is reviewed every 5 years;

Technical Committees are organised through Working Groups, which undertake the standards development;

Identify the standard: Determine the specific standard you want to read. Standards are typically identified by an alphanumeric code or a name that reflects the subject matter or industry they cover;

For example, ISO 9001 is a standard for quality management systems, and IEEE 802.11 is a standard for wireless local area networks (Wi-Fi).

Understand the structure of the standards catalogues;

The following subsections describe the main qualities of these catalogues. Unless otherwise mentioned, when we speak of "standards" in the following, we mean "catalogue standards";

Obtain the standard: ISO/IEC standards can normally be purchased through the ISO or IEC websites, or they may be available through your organization’s or academic institution’s library;

Understand the structure: ISO/IEC xxxx adheres to a standardized framework comprising sections, clauses, and annexes. It is advisable to become familiar with this structure in order to navigate the document efficiently;

Read the introduction and scope: Start by reading the standard’s introduction and scope sections, which explain what the standard is for and where it applies. This will help you figure out what ISO/IEC xxxx is about and what its goals are.
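The "identify the standard" step above relies on alphanumeric designations such as ISO 9001 or IEEE 802.11. The following is a minimal sketch of how such a designation could be split into its parts. The pattern `PUBLISHER NUMBER[-PART][:YEAR]`, the `StandardId` type, and the `parse_standard_id` helper are assumptions made for illustration; real designations vary between standards bodies and are not all covered by this pattern.

```python
import re
from typing import NamedTuple, Optional


class StandardId(NamedTuple):
    publisher: str        # e.g. "ISO", "IEEE", "ISO/IEC"
    number: str           # e.g. "9001", "802.11"
    part: Optional[str]   # e.g. "1" in "ISO 19005-1:2005"
    year: Optional[str]   # e.g. "2005"


# Assumed designation shape: PUBLISHER[/PUBLISHER] NUMBER[-PART][:YEAR]
_DESIGNATION = re.compile(
    r"^(?P<publisher>[A-Z]+(?:/[A-Z]+)*)\s+"
    r"(?P<number>\d+(?:\.\d+)*)"
    r"(?:-(?P<part>\d+))?"
    r"(?::(?P<year>\d{4}))?$"
)


def parse_standard_id(text: str) -> Optional[StandardId]:
    """Split a designation into its parts, or return None if it does not fit."""
    match = _DESIGNATION.match(text.strip())
    if match is None:
        return None
    return StandardId(
        match["publisher"], match["number"], match["part"], match["year"]
    )


# Designations taken from the text; the last one is a placeholder and is rejected.
for designation in ["ISO 9001", "IEEE 802.11", "ISO 19005-1:2005", "ISO/IEC xxxx"]:
    print(designation, "->", parse_standard_id(designation))
```

A placeholder such as "ISO/IEC xxxx" deliberately fails to parse, which makes it easy to spot designations that still need to be filled in.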

Table 1 contrasts the current catalogue standards by Standard Title, Standard Status Descriptor, Indicators, and Statements.

Other standards.

A "de facto standard," also known as a "standard in actuality," occurs when a successful solution is widely and independently adopted by multiple industries within a market segment, and products made on that basis are universally accepted by customers. Many technologies, products, and services are based on existing standards, so both formal and de facto standards have an impact on our daily lives. Examples include the following.

Example 1: De facto standards have the potential to transition into formal standards when they are officially published by recognized Standards Development Organizations (SDOs). One example is HTML (HyperText Markup Language), which was developed in the early 1990s by Tim Berners-Lee at CERN in Geneva, Switzerland, and was subsequently standardized and maintained by the World Wide Web Consortium (W3C).

Example 2: Another example is PDF (Portable Document Format), which was created by Adobe Systems in 1993 and subsequently standardized by ISO (ISO 32000, ISO 19005-1:2005).

Example 3: The QWERTY keyboard layout, currently the most widely used, was developed by Christopher Sholes in the 1870s and patented in 1878. The Dvorak keyboard layout, developed by August Dvorak in 1936, aimed to enhance typing speed. However, owing to the entrenched dominance of the QWERTY layout, Dvorak was never widely adopted, despite being natively supported by most contemporary operating systems.

Materials and Methods

As stated in the Introduction, this research employed the Systematic Literature Review (SLR) approach to gather relevant research articles pertaining to two key concepts: "Standard" and "Information and Communications Technology". The collection of research articles was conducted using three reputable digital libraries: (1) Web of Science, which offers a wide range of multidisciplinary research articles in the scientific fields; (2) IEEE Xplore, which specializes in articles related to electrical and electronics engineering; and (3) the ACM Digital Library, which provides a comprehensive database for computing and information technology.

RQ1. What does the current research literature reveal about the Standardisation and ICT methods and topic?

In March 2023, multiple searches were conducted on the three databases using keywords (or phrases) such as "Standard", "Standards", "de facto standard", "Standardization", "Information and Communications Technology", "ICT", "Software", "Application Development", "Apps", "Techniques", "Methods", "Implementation", "Guide", and "Algorithm". The keywords varied only slightly. The operators "OR" and "AND" were then used to link these terms, followed by "Refining Information."
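To make the combination of keywords with "OR" and "AND" concrete, here is a minimal sketch of how such a Boolean search string could be assembled. The grouping of keywords into three sets and the helper names `or_group` and `build_query` are assumptions for illustration; the exact queries used in this study are those shown in Figure 2, and each database has its own query syntax.

```python
def or_group(terms):
    """Quote each term, join the terms with OR, and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """Combine several OR-groups with AND."""
    return " AND ".join(or_group(group) for group in groups)


# Hypothetical grouping of the keywords listed in the text.
standard_terms = ["Standard", "Standards", "de facto standard", "Standardization"]
ict_terms = ["Information and Communications Technology", "ICT"]
method_terms = ["Techniques", "Methods", "Implementation", "Guide", "Algorithm"]

query = build_query(standard_terms, ict_terms, method_terms)
print(query)
```

Synonyms are joined with OR inside a group so that any one of them matches, while the groups are joined with AND so that a paper must touch every concept.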

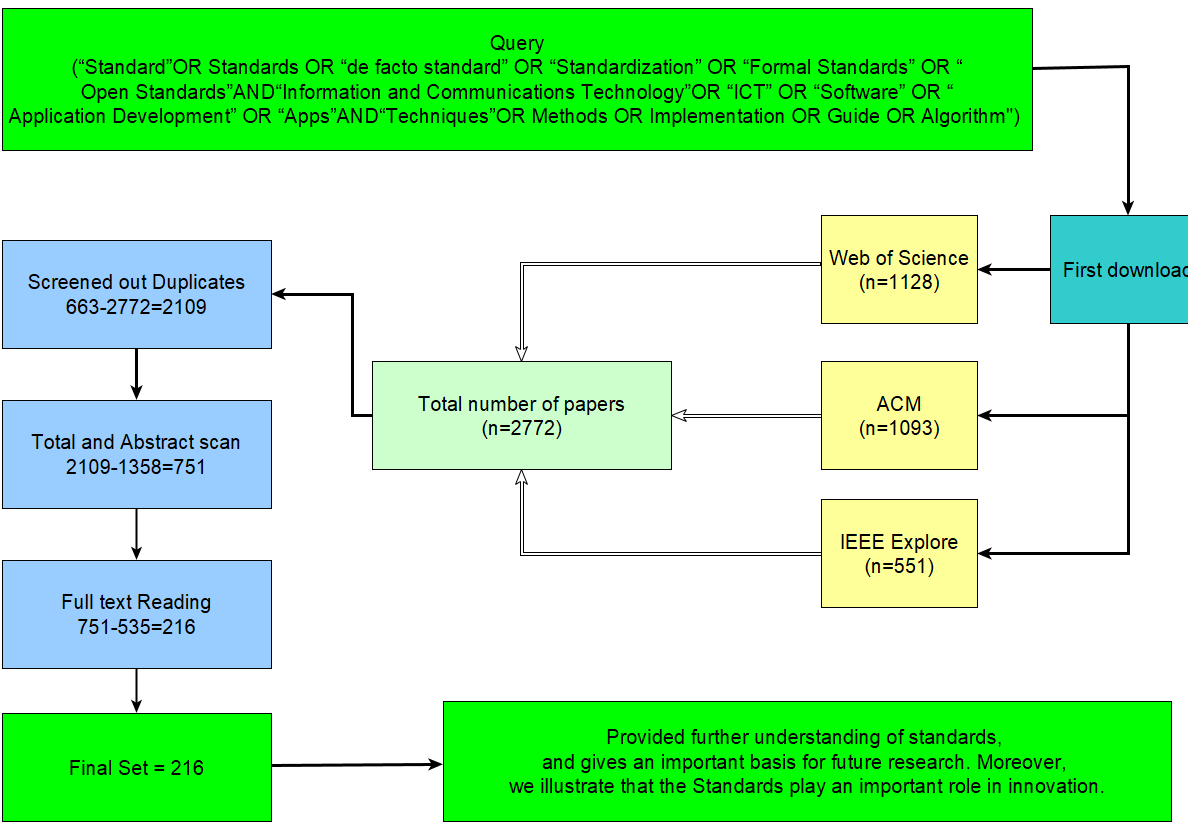

Figure 2 depicts the search queries utilized in this study. Some results were removed because they were letters, magazine pieces, or book chapters. The primary purpose of this exclusion was to gather the most up-to-date scholarly literature on the difficulties and opportunities of standards. The results were then separated into two categories: (1) general and (2) coarse-grained. The latter is discussed in five succeeding sections derived from the study findings, where the Google Scholar search engine was used to establish the study’s direction.

The importance of the collected articles was assessed in order to keep the most relevant articles from a huge number of collected academic papers. Furthermore, the included articles were classified using two criteria: (1) initial screening to find relevant results; and (2) three iterations in the filtering process to remove redundant and duplicated articles.

An article was excluded in the first cycle if it met any of the following criteria: (1) the paper was not written in English; (2) Standard and/or Standardization were not the focus of the paper; or (3) the research interest in the paper was only focused on Standard without Information and Communications Technology. Furthermore, after the second exclusion cycle, an article could be removed if: (1) the contribution of the paper did not consider any aspects of Standard on Information and Communications Technology; or (2) the discussion in the paper was solely focused on Standard on Information and Communications Technology and did not consider any other topic.
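The first exclusion cycle described above can be sketched as a simple filter. This is only an illustration under stated assumptions: the `Paper` record and its boolean fields are hypothetical (in practice these judgements are made by a reviewer reading titles and abstracts), and criterion (2) is read here as "standards are not the focus of the paper".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Paper:
    title: str
    language: str
    about_standards: bool  # hypothetical reviewer judgement
    about_ict: bool        # hypothetical reviewer judgement


def first_cycle_exclusion_reason(paper: Paper) -> Optional[str]:
    """Return the reason a paper is excluded, or None if it is kept."""
    if paper.language.lower() != "english":
        return "not written in English"
    if not paper.about_standards:
        return "standards are not the focus"
    if not paper.about_ict:
        return "standards without ICT"
    return None


# Made-up records used only to exercise the filter.
papers: List[Paper] = [
    Paper("IoT interoperability standards", "English", True, True),
    Paper("Normes de qualité", "French", True, True),
    Paper("Track gauge standards in rail", "English", True, False),
    Paper("A new sorting algorithm", "English", False, True),
]

kept = [p for p in papers if first_cycle_exclusion_reason(p) is None]
for p in papers:
    print(p.title, "->", first_cycle_exclusion_reason(p) or "kept")
```

Returning the reason rather than a bare boolean keeps a record of why each article was removed, which is useful when reporting the screening results.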

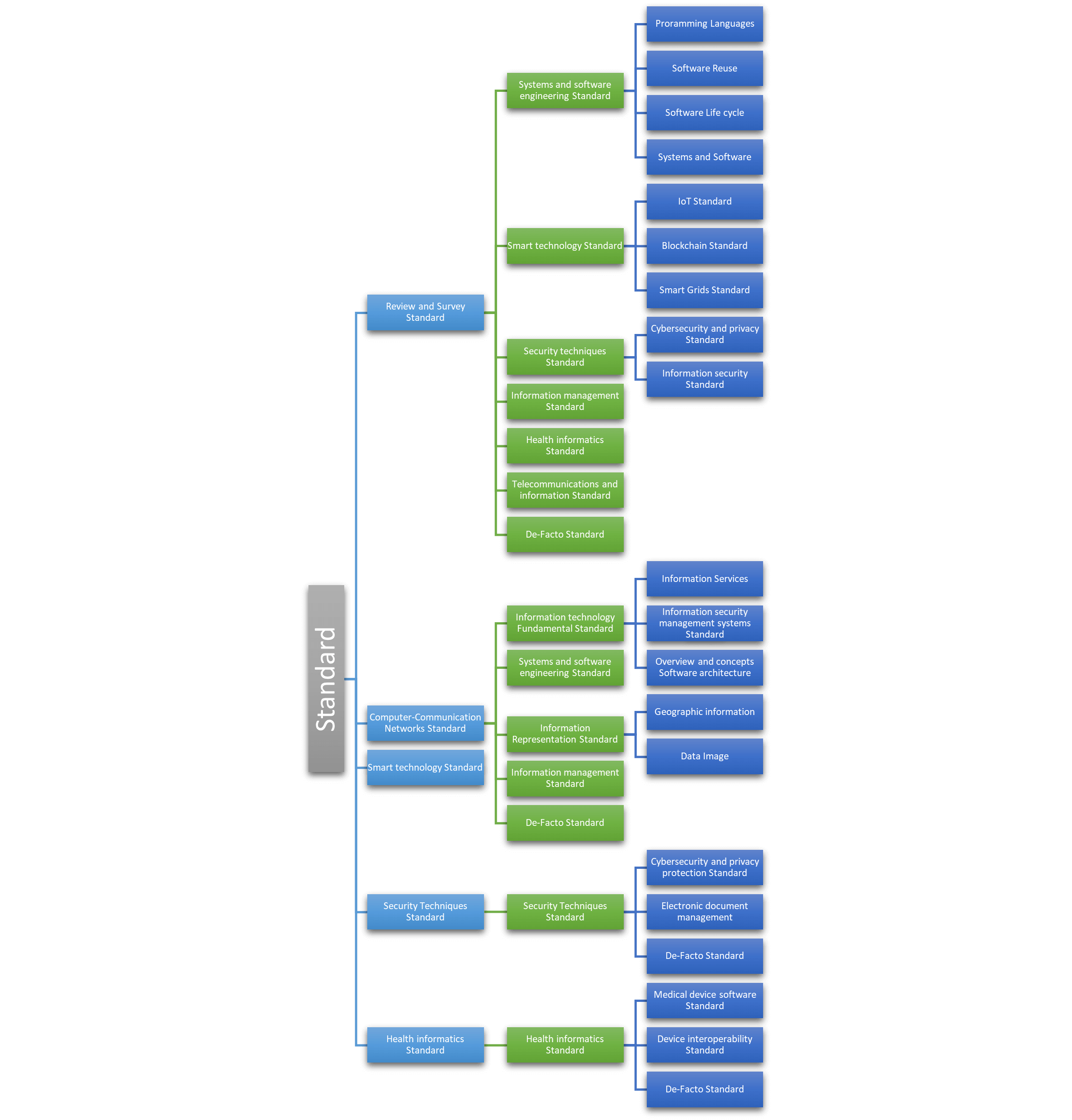

In this effort, papers were filtered extensively before being classified into five groups based on the proposed methodologies for and future research plans on standardisation. The categories were: (1) review and surveys, (2) computer communication networks Standard, (3) smart technology standard, (4) security techniques standard, and (5) health informatics standard. Subsequently, further subcategorization was performed according to the authors’ writing and presentation of the articles to readers.

Our findings are shown in Figure 3. The searches returned 2772 research papers, of which 1128 were found in Web of Science (WoS), 551 in IEEE Xplore, and 1093 in the ACM Digital Library. The chosen articles were all published between 2013 and 2023. These articles were then split into three groups: (1) the 663 redundant articles; (2) the 1358 irrelevant articles based on the titles and abstracts; and (3) the 216 articles that met the search criteria for ICT standards.

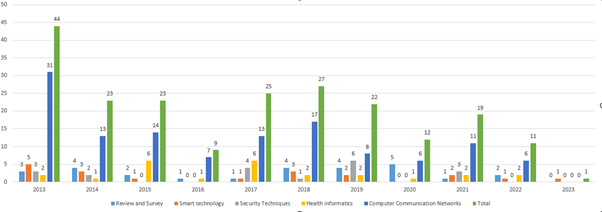

The statistics for the various categories listed above for the articles about ICT standardization are shown in Figure 4. In the figure, it can be seen that the 216 articles from the three databases were divided into review and surveys (27), computer communication networks standard (126), smart technology standard (19), security techniques standard (19), and health informatics standard (25).
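The counts reported above lend themselves to a quick arithmetic check. The sketch below only re-adds the figures given in the text; the variable names are illustrative. It also reports how many retrieved records are not covered by the three reported screening groups, since the text does not itemise them.

```python
# Figures as reported in the text.
retrieved = {"Web of Science": 1128, "IEEE Xplore": 551, "ACM Digital Library": 1093}
screening = {"redundant": 663, "irrelevant by title/abstract": 1358, "included": 216}
categories = {
    "review and surveys": 27,
    "computer communication networks standard": 126,
    "smart technology standard": 19,
    "security techniques standard": 19,
    "health informatics standard": 25,
}

total_retrieved = sum(retrieved.values())
total_screened = sum(screening.values())
not_itemised = total_retrieved - total_screened

print("retrieved:", total_retrieved)
print("covered by the three screening groups:", total_screened)
print("not itemised in the text:", not_itemised)

# The five categories should add up to the number of included articles.
assert sum(categories.values()) == screening["included"]

# Share of each category among the included articles.
for name, count in categories.items():
    print(f"{name}: {count} ({count / screening['included']:.1%})")
```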

Figure 4 depicts the statistics for the publications published between 2013 and 2023. The figure shows the number of research articles published in each of the five categories for each year. It can be noted that forty-four papers were published in the early years, such as 2013. Between 2014 and 2018, the figure gradually climbed, rising from twenty-five in 2018 to twenty-seven in 2019. From 2020 onwards, the number of publications remained stable until 2023, when it reached 32 research articles. This demonstrates a growing research interest in standardization.

We created a taxonomy of the current standardization literature produced in recent years, as shown in Figure 5. The taxonomy consists of several standardization areas, including Systems and Software Engineering Standard, Computer-Communication Networks Standard, Smart Technology Standard, Security Techniques Standard, and Health Informatics Standard. The taxonomy is explained in detail with the help of a block diagram.

Review and Survey Articles

Recently published survey and review articles have provided a comprehensive description and summary of the current state of the art in standardization and its applications. These articles reviewed the technological hurdles and standardisation considerations. The twenty-seven articles in this category were divided into seven subcategories. Representative surveys from these standardisation studies are described and discussed below:

RQ2. What are the main goals, vision, and trends for standardisation, and what research may be highlighted in this field?

Systems and software engineering standard

The analysis and research documentation provides an overview of the current understanding of standardization in systems and software engineering technology readiness.

Programming languages standard

A programming language standard is a formal document that specifies the syntax and semantics of a particular programming language. Typically, when discussing real-world languages, this document entails a detailed explanation using easily understandable language rather than formal semantics expressed in mathematical terms.

Two articles [12], [13] surveyed real-time distribution middleware, focusing on its adherence to standards and its impact on the industry. The objective of these articles was to offer technicians and domain experts a comprehensive understanding of this field. They also discuss the preliminary measures implemented in the utilization of distribution standards in high-integrity systems. The survey concludes with a comprehensive analysis of the advantages and disadvantages of the various approaches, and highlights unresolved matters, significant challenges, and potential avenues for future research.

Another article [14] provided a more detailed account of the early history, placing a significant emphasis on hygienic macros. It then chronicles the subsequent advancements, widespread adoption, and profound impact of hygienic and partially hygienic macro technology in the context of Scheme. The account prominently explores the dynamic relationship between the inclination towards standardization and the advancement of algorithms.

Software Reuse standard

Standardization of software reuse results in increased reliability, productivity, quality, and cost savings. Current reuse strategies focus on the reuse of software artifacts, which is based on projected functionality, but the non-functional (quality) side is also significant.

An article [15] was published that provides an expert review of a framework that has been developed for the implementation of a novel testing approach known as taxonomy-based testing (TBT) in the domain of medical device software. This framework presents three methodologies for implementing TBT and has undergone validation by experts from the software testing industry and the medical device software domain.

A comprehensive analysis was conducted on multiple software engineering standards issued by the Institute of Electrical and Electronics Engineers Standards Association (IEEE SA) [16]. The standards were investigated to identify the factors and sub-factors that may contribute to diverse situations among members of software development teams. In total, 12 factors and 52 sub-factors were identified as potential contributors.

Software life cycle processes standard

The implementation of standardized system lifecycle processes as a means of enhancing efficiency is centered on attaining a consistent level of delivery at the enterprise level throughout every stage of the lifecycle. Furthermore, a standardized approach to system lifecycle management not only offers a structure for effectively overseeing individual systems-related tasks, but also enables the organization to gain comprehensive insight into the utilization of system-related resources, financial aspects, and project progress at an organizational level.

Two articles [17], [18] address the topics of governance, management, conceptualization, evaluation, elaboration, and enablement. The set consists of 45 activities, each containing between five and eight tasks. This standard can serve as a fundamental basis for organizational training and guidebooks pertaining to architecture management and development. A preliminary version of the model’s architecture and its components has been constructed, yielding partial results. The model has been developed based on key references and best practices identified for Agile Governance during the course of this research.

The author of the study [19] employs an SLR methodology to identify and analyze software process improvement (SPI) initiatives within small and medium-sized enterprise (SME) software firms. The findings indicate that a significant proportion of SPI initiatives within SMEs are implemented in America and Europe. In Asia, an SPI initiative was reported only in Hong Kong, where the CMM Fast-Track model was developed. Numerous proposals have been put forth regarding SPI initiatives for SME software firms.

Systems and software Quality Requirements and Evaluation (SQuaRE) standard

The topics covered in this document include general guidelines, planning and management, system and software quality models, data quality models, quality measurement frameworks, quality measure elements, framework evaluation processes, evaluation guides, evaluation modules, testing, and common industry formats.

An article [20] proposed a software evaluation method that relies on users’ feedback, utilizing the data stored in the help desk’s database system. The procedure involves analyzing the help desk’s inquiries during a designated time frame, identifying the root causes of issues, and suggesting enhancements to the product and methodologies that could mitigate similar inquiries in the future. The analysis is conducted using the ISO/IEC 9126 quality model and uses ISO 31010 tools to identify the underlying causes of system issues within the production environment.
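The kind of analysis described above can be illustrated with a short sketch that groups help-desk inquiries by quality characteristic and ranks them by frequency. This is not the method of [20]; the ticket records, field names, and `tally_by_characteristic` helper are hypothetical. The six characteristic names are those of the ISO/IEC 9126 quality model.

```python
from collections import Counter

# The six top-level quality characteristics of ISO/IEC 9126.
ISO_9126_CHARACTERISTICS = (
    "functionality", "reliability", "usability",
    "efficiency", "maintainability", "portability",
)

# Hypothetical help-desk inquiries, each already assigned a root cause
# and the quality characteristic it relates to.
tickets = [
    {"id": 1, "root_cause": "unclear error message", "characteristic": "usability"},
    {"id": 2, "root_cause": "nightly job crashes", "characteristic": "reliability"},
    {"id": 3, "root_cause": "confusing menu layout", "characteristic": "usability"},
    {"id": 4, "root_cause": "report totals wrong", "characteristic": "functionality"},
    {"id": 5, "root_cause": "session lost on timeout", "characteristic": "reliability"},
    {"id": 6, "root_cause": "hidden export button", "characteristic": "usability"},
]


def tally_by_characteristic(records):
    """Count inquiries per characteristic, most frequent first."""
    counts = Counter()
    for record in records:
        characteristic = record["characteristic"]
        if characteristic not in ISO_9126_CHARACTERISTICS:
            raise ValueError(f"unknown characteristic: {characteristic}")
        counts[characteristic] += 1
    return counts.most_common()


ranking = tally_by_characteristic(tickets)
for characteristic, count in ranking:
    print(f"{characteristic}: {count}")
```

Ranking the characteristics in this way points to where product or process improvements are likely to reduce the most inquiries.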

Smart technology standard

The term "smart" pertains to the various formats, processes, and tools that are essential for facilitating user interaction with standards. This interaction can involve both human and technology-based users. These digital solutions cater to the requirements of various stakeholders, including industry players, regulators, end users, and society.

Internet of Things (IoT) Standard

The lack of standards poses a challenge for the IoT because it makes it difficult for various systems and devices to collaborate and communicate effectively. Creating a universal standard that functions across all IoT devices is hard, since the devices are produced by numerous vendors and can employ a wide range of communication protocols and data formats.

This article [21] focused on strategies to improve digitization and standardization within the context of Industry 4.0. The review examines both traditional and recent advancements in Industrial IoT (IIoT) technologies, frameworks, and solutions that aim to enhance interoperability among various components within the IIoT ecosystem. It also discusses various interoperable IIoT standards, protocols, and models that can be utilized to digitize the industrial revolution.

Blockchain standard

Blockchain is a decentralized, immutable database for tracking assets and transactions in corporate networks. It enables the recording and trading of value, reducing risk and increasing efficiency. This subsection summarizes work on blockchain standardization.
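The "immutable" property mentioned above comes from chaining records by cryptographic hash. The following is a minimal sketch of that idea only, under stated assumptions: the block layout and the helper names `block_hash`, `append_block`, and `is_valid` are illustrative, and the sketch leaves out decentralisation, consensus, and signatures, which real blockchain platforms and standards address.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # assumed placeholder for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Append a new block that points at the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain):
    """Recompute every hash and check that each block links to its predecessor."""
    prev_hash = GENESIS_PREV_HASH
    for position, block in enumerate(chain):
        if block["index"] != position or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_block(ledger, {"from": "A", "to": "B", "amount": 5})
append_block(ledger, {"from": "B", "to": "C", "amount": 2})
print("valid before tampering:", is_valid(ledger))

ledger[0]["data"]["amount"] = 500  # tamper with an earlier record
print("valid after tampering:", is_valid(ledger))
```

Because each block's hash covers the previous hash, changing any earlier record invalidates the chain unless every later block is recomputed, which is what makes tampering evident.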

An article [22] was presented that offers a comprehensive review of grid and prosumer blockchain applications, while also highlighting the standard development activities conducted by the IEEE SA. The review indicates that blockchain technology in the energy sector is in a state of maturation and holds potential for application across various domains within the power and energy industry. It has the capacity to facilitate the advancement of diverse modern grid technologies.

Smart Grids standard

A smart grid is an electricity network that monitors and manages the conveyance of power from all generation sources in order to satisfy the shifting electricity demands of end users. The number of successful smart grid initiatives continues to expand, with each project ranging in scale and complexity.

One article [23] discusses using IEC 61850 with the IEC 61499 reference model for distributed automation and creating a Smart Grids Compliance Profile. The IEC 61850 power utility automation standard ensures strong interoperability, which smart grids and their devices and components need.

Security techniques standard

The goals of standardization are uniformity, traceability, and repeatability. By always using the same security methods across all work, the security team knows what has been protected and what has not. This tells the team whether extra steps are needed and whether there are any exceptions. Standardizing security policies can take many forms, such as legal compliance, access controls, acceptable use policies, security as code, and automation, to name a few.
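As an illustration of the "security as code" idea mentioned above, the sketch below expresses a baseline as data and checks every system against it, so that exceptions are found the same way each time. The baseline controls, system names, and settings are all hypothetical and are not drawn from any particular standard.

```python
# Hypothetical baseline: each control maps to a check on the configured value.
BASELINE = {
    "mfa_enabled": lambda value: value is True,
    "disk_encrypted": lambda value: value is True,
    "tls_version": lambda value: value is not None and tuple(value) >= (1, 2),
}

# Hypothetical inventory of systems and their settings.
systems = {
    "web-01": {"mfa_enabled": True, "disk_encrypted": True, "tls_version": (1, 3)},
    "db-01": {"mfa_enabled": True, "disk_encrypted": False, "tls_version": (1, 2)},
    "legacy-01": {"disk_encrypted": True, "tls_version": (1, 0)},
}


def find_exceptions(inventory):
    """Return, per system, the baseline controls that are missing or failing."""
    report = {}
    for name, settings in inventory.items():
        failed = [
            control
            for control, passes in BASELINE.items()
            if not passes(settings.get(control))
        ]
        if failed:
            report[name] = failed
    return report


exceptions = find_exceptions(systems)
for name, failed in exceptions.items():
    print(f"{name}: {', '.join(failed)}")
```

A missing setting is treated as a failure, so a system cannot pass the baseline simply by omitting a control from its configuration.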

Cybersecurity and privacy protection standard

A cybersecurity standard refers to a collection of guidelines or best practices that organizations can adopt in order to enhance their cybersecurity stance. Organizations have the opportunity to leverage cybersecurity standards in order to effectively identify and implement suitable measures for safeguarding their systems and data against potential cyber threats. Standards can also offer valuable guidance regarding the appropriate actions to take in response to and mitigate the impact of cybersecurity incidents.

One article [24] described the outcomes of implementing different lattice-based authentication algorithms on smart cards and a microcontroller often found in smart cards. Only a handful of the proposed lattice-based authentication protocols may be implemented utilizing the restricted resources of such small devices; however, cutting-edge ones are sufficiently efficient to be employed practically on smart cards.

This paper [25] presented a comprehensive review of the key attributes of wireless communication technologies in the context of Industry 4.0. It also examines various security vulnerabilities and concerns that exist within this sector, and provides a concise summary of each of the industrial revolutions, with an emphasis on the primary technological distinctions that set them apart.

Information security management systems standard

Data security standards refer to a set of guidelines or criteria that organizations can adhere to in order to safeguard sensitive and confidential information. These standards are designed to mitigate the risk of unauthorized access, use, disclosure, disruption, modification, or destruction of data.

The aim of this study [26] is to evaluate and analyze various methods and standards in secure software development. By conducting a survey and comparison, the objective is to identify specific stages within the software development life cycle (SDLC) where one or more of these methods or standards could be implemented to enhance the security of a software application throughout its development process.

Information management standard

The purpose of this Information Management Standard is to establish fundamental principles and obligations that must be adhered to in order to achieve efficient Information Management. The purpose of this system is to ensure that information is created, managed, retained, and disposed of in a manner that aligns with appropriate protocols and guidelines.

An article [27] was identified that discusses the capabilities and requirements necessary to ensure information quality in the context of reducing vulnerabilities in DAECO. This study incorporates the theoretical frameworks of resilience, information quality, and vulnerability, as well as the established standards of ISO19650 and ISO27001.

An additional study [28] was conducted, which presented a SLR consisting of 47 carefully chosen user studies for the field of end-user software engineering (EUSC). The article employs a review framework to systematically and consistently evaluate the focus, methodology, and cohesion of each of the aforementioned studies. The results have contributed to the creation of a design framework and a series of inquiries for the purpose of designing, reporting, and evaluating effective user studies for EUSC.

The paper [29] introduced and summarized their work in the IVHM/PHM standard structures and standards. Many standard organizations in the IVHM/PHM domain are eager to build their standard systems for various purposes and applications, and they have formed various standard structures. The most important organizations are ISO, SAE, ARINC, and IEEE.

Health informatics standard

Health informatics standards assist in ensuring the safety, usability, and appropriateness of health-specific applications used by clinicians, patients, and citizens. They promote interoperability so that electronically communicated information can be accurately interpreted and used across systems, thereby enabling effective decision-making, continuity of care, and other relevant objectives.

According to one publication [30], support for graphical-based assurance case assessment is included in 10 assurance case tools, all of which have remarkable assessment capabilities. More specifically, by studying the evaluation techniques supported by the tools, three kinds of methods for reviewing an assurance case’s structure and five categories of assistance for assessing an assurance case’s content were discovered.

The purpose of this study [31] is to conduct an SLR that examines the level of support provided by current standards, specifically ISO 13485:2016, ISO 14971:2012, IEC 62304:2006, and IEC 62366:2015, as well as current reference architectures for medical device software, towards ISO/IEC 25010:2011 and the 4+1 views.

This article [32] aimed to explore the topic of ensuring safety in product lines and to identify potential gaps in existing research. It performed an SLR on research publications published up until January 2016 and identified 39 research articles for inclusion as primary studies. The analysis examined how product lines are documented in these articles, with a particular focus on the safety-related topics covered and the evaluation methods employed by the studies.

Two articles [33], [34] provided a comprehensive analysis of currently employed software-based tolerance techniques that effectively safeguard the system against soft errors. The aforementioned techniques are subsequently linked to the software requirements mandated by EN 50128, which serves as the software standard within the railway industry, as well as those mandated by IEC 60601-1, the overarching standard for the medical field. The detection techniques have been demonstrated to facilitate compliance for both EN 50128 and IEC 60601-1.

Telecommunications and information standard

The integration of voice, data, video, and wireless technologies is occurring at a rapid pace within the current converged communications marketplace. As an increasing number of organizations integrate into the dynamic communications network, they swiftly recognize the significance of standardization, planning, and ongoing improvement as essential prerequisites for ensuring functionality, interoperability, and reliability.

A study [35] examined the use of mmWave communication and radar devices for device-based localization and device-free sensing, with a specific emphasis on indoor deployments. The study provides an overview of the key concepts related to mmWave signal propagation and system design, and details various approaches, algorithms, and applications for mmWave localization and sensing.

Another article [36] presented a survey on the significance of both traditional and modern telecommunication management networks (TMN) and ICT management. It also categorized all prominent network and technology management systems that are currently recognized, with the purpose of establishing a framework for the design of network management architectures.

De-Facto Standards

In one article [37], the state of computing is surveyed in relation to 3D virtual spaces, and the steps necessary to create a network of 3D virtual worlds, or Metaverse, that provides a compelling alternative realm for human sociocultural interaction, are outlined. Convergence on the network protocol used by Linden Lab for Second Life as a de facto standard has enabled decentralized development, which in turn has led to the decoupling of the client and server sides of a virtual world system.

This article [38] presented a comprehensive survey that focuses specifically on algorithmic and combinatorial aspects. The majority of routing protocols ensure the achievement of convergence towards a stable state of routing. In the event that there are no alterations to the topology or configuration, it can be observed that each router will ultimately establish a consistent route to any given destination. However, this assertion does not hold true for policy-based routing protocols, such as the Border Gateway Protocol (BGP), which is widely accepted as the standard for interdomain routing.

Computer-Communication Networks standard

The second category included 126 articles related to the objective of ensuring compatibility between hardware and software from various vendors. Developing networks that facilitate seamless information sharing would be significantly challenging, if not unattainable, in the absence of established networking standards. Standards also ensure that customers have the flexibility to choose from multiple vendors.

Customers have the flexibility to procure hardware and software from any vendor, as long as the equipment adheres to the established standard. Standards play a crucial role in fostering increased competition and maintaining price stability.

Information technology Fundamental standard

Information technology standards encompass a comprehensive range of both hardware and software standards. The significance of software and information formatting standards is steadily growing. There are established standards for operating systems, programming languages, communications protocols, and human-computer interaction. An illustration of this would be the necessity for standardized protocols in order to facilitate the global exchange of electronic mail messages. These protocols encompass the establishment of addressing conventions, formatting guidelines, and efficient transmission methods.

Information Services standard

This section outlines the necessary requirements that a service must meet in order to demonstrate its suitability for the intended purpose. The standard may encompass various components, including definitions, indicators of service quality, and their corresponding levels, as well as stipulations regarding the timeframe for service delivery. An example of such a standard is one pertaining to the management of customer complaints.

One paper [39] presented the Warbler family, a pseudorandom number generator family based on NLFSRs with acceptable randomness. The security analysis demonstrates that the proposed instances can pass the cryptographic statistical tests recommended by the EPC C1 Gen2 standard and NIST, as well as resist cryptanalytic attacks such as algebraic attacks, cube attacks, time-memory-data tradeoff attacks, Mihaljević et al.’s attacks, and weak internal state and fault injection attacks.
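To make the idea of an NLFSR-based generator more concrete, the following minimal Python sketch steps a toy 8-bit nonlinear feedback shift register. The register width, tap positions, and feedback function are hypothetical choices made purely for illustration. They are not the Warbler design described in [39], and the sketch offers no cryptographic security.

```python
def nlfsr_bits(seed, n_bits, width=8):
    """Return n_bits output bits from a toy NLFSR (hypothetical taps, not Warbler)."""
    mask = (1 << width) - 1
    state = seed & mask
    if state == 0:
        # The all-zero state is a fixed point of this feedback function.
        raise ValueError("seed must be non-zero")
    out = []
    for _ in range(n_bits):
        out.append(state & 1)  # emit the least significant bit
        b0 = state & 1
        b3 = (state >> 3) & 1
        b5 = (state >> 5) & 1
        b6 = (state >> 6) & 1
        # XOR terms are linear; the AND term makes the feedback nonlinear.
        feedback = b0 ^ b3 ^ (b5 & b6)
        # Shift right by one and insert the feedback bit at the top.
        state = (state >> 1) | (feedback << (width - 1))
    return out


if __name__ == "__main__":
    print(nlfsr_bits(0xB5, 16))
```

A real design would be judged by the statistical tests and attack resistance discussed above; this toy register only shows where the nonlinearity enters the feedback path.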

One paper [40] described software for analyzing 3D-scanned electronic geometric models. ISO 8559-1 gives specialized information equivalent to national regulatory texts. The paper also briefly discussed basic information completeness and presentation needs.

One study [41] pointed to the lack of thorough test data and the flexibility of the translation procedures. Based on extensive outdoor test data, it used software scripts to evaluate the performance of the three standard translation methods recommended under IEC 60891, with the reference conditions optimized to produce the best result in different irradiance and temperature ranges.

A study [42] implemented a two-level warning system that provides cautionary and imminent warnings when a driver intends to perform a lane change. The experimental findings indicate that the in-vehicle safety warning system effectively prompts drivers to reconsider their decision to change lanes. This is achieved through the use of visual and audible warnings, in accordance with the ISO 17387:2008 standard.

Two articles [43], [44] used the CPM/PDD to study user-centered design (UCD). Many UCD methodologies are based on the international standard ISO 9241-210. The work investigated the mapping of the two models in order to obtain support and insights for selecting methodologies and metrics for the creation of interactive systems. It also detailed the creation of a PHR-S for MS management based on the concepts and recommendations of the ISO 9241-210 standard.

Electronic document management standard

Managing information across the organization aids in the support of a variety of new technologies that produce electronic records standards, and record generation can be both active and passive (e.g., automated logging of system upgrades). Individual records standards can be established via electronic mail systems, as web-based publications, or as documents published and held in administrative information systems.

One study [45] offered findings concerning standard specifications and their implementations in open source software. Specifically, the analysis focuses on extensive insights and experiences connected to two open source projects that implement the PDF format standard.

Another paper [46] presented the use of the finite element method in CST simulation software to design a GTEM cell before it was built. After the GTEM cell was put into place, a 3-axis electromagnetic probe was used to test its field uniformity. The testing area and the volume of the object under test inside the GTEM cell were determined according to the IEC 61000-4 and IEEE 1309-2005 standards.

Overview and concepts Software architecture standard

This standard outlines the specifications for exception conditions and their corresponding default handling procedures. A floating-point system that adheres to this standard can be implemented using software, hardware, or a combination of both.

One paper [47] proposed methods for floating-point arithmetic built on the IEEE 754-2008 standard. These methods combine software and hardware, with multiplication implemented in an area-efficient way. Based on platform analysis, the proposed approaches can reduce execution time by 24%-81% compared to a typical software library and use 25%-94% less hardware than an area-efficient full decimal multiplier.

Two publications [48], [49] noted that the floating-point arithmetic cores offered by major FPGA manufacturers are not completely compliant with the IEEE 754 standard, because these circuits cannot accommodate non-normal (denormal) numbers. They also described the implementation of a single-precision floating-point adder compliant with IEEE 754 that accepts denormal inputs, and compared its performance and resource consumption to those of the Xilinx floating-point adder IP core.
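The distinction between normal and denormal (subnormal) values can be illustrated with a short Python sketch. It assumes the IEEE 754 binary32 layout (1 sign bit, 8 exponent bits, 23 fraction bits) and uses only the standard `struct` module; the function name `classify_float32` is a hypothetical helper, not part of any cited work.

```python
import struct


def classify_float32(x):
    """Classify x after rounding it to IEEE 754 single precision (binary32)."""
    # Pack as a big-endian 32-bit float, then reinterpret the bytes as an integer.
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    exponent = (bits >> 23) & 0xFF   # 8-bit biased exponent field
    fraction = bits & 0x7FFFFF       # 23-bit fraction field
    if exponent == 0:
        # An all-zero exponent encodes zero or a subnormal (denormal) number.
        return "zero" if fraction == 0 else "subnormal"
    if exponent == 0xFF:
        return "infinity" if fraction == 0 else "nan"
    return "normal"


if __name__ == "__main__":
    for value in (1.0, 2.0 ** -126, 2.0 ** -127, 1e-40, 0.0):
        print(value, classify_float32(value))
```

A core that flushes subnormal inputs to zero would treat the `2.0 ** -127` and `1e-40` cases as zero, which is the kind of non-compliance the publications above describe.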

Systems and software engineering standard

A software engineering standard can be characterized as a standard, protocol, or analogous document that delineates the regulations and procedures governing the development of software products. A conventional software development company typically maintains a collection of documents that are exclusively intended for internal use within the organization.

Programming languages standard

Standards are measurable criteria that everyone agrees to use so that they can communicate with each other. Some people think that the first standards were monetary systems that were made to help people trade things. A language is a way to talk to each other. The alphabet is a common way for people to share knowledge.

One document [50] proposed adaptations of existing approaches to dialog act recognition in order to address the hierarchical classification issue. Specifically, the proposed end-to-end hierarchical network predicts the communicative function, maintains dependencies, and determines the stopping level using cascading outputs and maximum a posteriori path estimation.

A recent study [51] introduced a modeling language that utilizes the ISO/IEC 24744 metamodel. This language effectively represents textual information in a well-structured format, allowing for the description of semantic relationships within the discourse. Furthermore, the paper presents an application of the proposed language in the field of requirements engineering, demonstrating the advantages of implementing the suggested approach and its potential in various textual domains.

A recent study [52] noted that SystemC, the IEEE 1666-2005 standard for embedded system modeling, architectural exploration, performance analysis, software development, and functional verification, has received SA approval for RTL simulation.

Software Reuse Standard

The objective was to present a set of standards for software development frameworks that enable the creation of secure and high-quality metamodels for software development methodologies. This category also aims to emphasize the alignment between each phase of software development and the relevant standards and regulations.

One publication [53] proposed techniques for discovering and extracting process models from legacy databases, laying the theoretical foundation for a model-driven framework and introducing gPROFIT, a machine learning tool for real-world application.

Another paper [54] gave an overview of TDL and talked about how it could be used in a train use case. It lets you describe events at a higher level of generality than you can with programming or scripting languages. Also, TDL can be used to describe tests that come from other places, like simulators, test case generators, or logs from previous test runs.

Two papers [55], [56] proposed applying the principles of software reliability engineering to commercial software creation. They established a number of additional steps for ensuring software stability at each stage of the SDLC. In addition, they used models and metrics for assessing and analyzing software product reliability. These are founded on universally accepted criteria for ensuring the safety of computer programs.

Software life cycle processes standard

The software development lifecycle (SDLC) is the method that development teams use to design and build high-quality software in the least amount of time and for the least amount of money. The goal of the SDLC is to plan ahead to reduce project risks so that software meets customer expectations during development and afterward. The standard outlines how to set up a common framework for software life cycle processes with well-defined terms that the software industry can use as a guide.

In one study [57], a design framework called TooMVBC was proposed to turn a formal, verified computation model into executable MVBC code. TooMVBC uses the formal computation model MVBChart to obtain a high-level description of the MVBC. TooMVBC was applied to design an MVBC of the highest class, class 5, as specified by the IEC 61375 standard. During formal verification of the built system model, several important ambiguities or bugs in the standard were found.

Another paper [58] described a system for modeling software development processes that ensures standard compliance from specification to execution. The motivation is that, from specification to enactment, there has been no (semi-)automatic technique to ensure that de-facto processes meet de-jure norms.

A third paper [59] presented the Serious Game Quality Assessment Method (SG-QUAM), an ISO/IEC 25040-aligned evaluation approach with activities and artifacts for serious game quality evaluation. It also supplied a usability and portability model matched to the ISO/IEC 25010 standard and tailored to this area, as well as quality in use when players use the application.

This study [60] offered a viewpoint-oriented strategy for developing Industry 4.0 applications to better adapt system models to stakeholders and concerns. The technique uses existing or developing systems and software engineering (ISO/IEC/IEEE 42010:2011) and Industry 4.0 (RAMI 4.0, IEC 61131-3) standards. The study then models and implements views, including an industrial use case.

One author [61] introduced a Substation Data Processing Unit for mapping entities between power systems and communication networks using IEC 61850-based modeling.

One study [62] used the power quantities defined in IEEE Standard 1459, identified from voltage and current signals, together with NCA, MRMR, and CST for feature selection, reducing computational time and improving classification results.

Two publications [63], [64] assessed software quality, an important research topic in computer software. They drew on International Organization for Standardization standards for understanding, measuring, and assessing software quality, noting that standardization improves software quality prediction and understanding. They also introduced the development of international quality standards and focused on software quality development trends.

Five papers [65]–[69] reported on small software development companies, clustered in a process improvement program, that implemented the ISO/IEC 29110 standard, and described the findings and lessons learned. The ISO/IEC 29110 engineering and management manuals are simple and free, which has encouraged their acceptance, and ISO/IEC 29110 is taught in more than 17 countries. VSEs may demonstrate their expertise to local and international customers and partners via low-cost independent certification and assessment methods. The papers show practical frameworks for each Software Implementation activity, including inputs, outputs, procedures, and limitations. ISO/IEC 29110 is a lightweight project management and software implementation standard.

Four papers [70]–[73] provided examples. CERTICSys supports process assessment using the ISO/IEC 15504 standard, and CERTICS evaluates and certifies software developed and innovated in Brazil. The NBR ISO/IEC 9126 standards were used to evaluate software, and the ten golden guidelines for medical education software were described; SimDeCS was used to test this method.

Some papers [74]–[78] used ISO/IEC standards such as 9126, 14598, and 15939 to create software that integrates the theoretical elements of these standards with the practicalities of software maintenance. The main results were a set of uniform processes and procedures and a Process Asset Library to support good project management practices in participating companies.

Systems and software Quality Requirements and Evaluation (SQuaRE) Standard

This standard describes "how the software product was created" and "how it works" in addition to its functional aspects. It covers general guidelines, planning and management, system and software quality models, data quality models, the quality measurement framework and elements, the framework evaluation process, the evaluation guide, evaluation modules, testing, and the standard industry format.

Two papers [79], [80] addressed boundary scan. The IEEE 1149.1 boundary scan test standard for ICs helped miniaturize PCB assembly. These papers discussed applications of the JTAG boundary scan test solution and the development of an MPU JTAG provision. Work in this category also examined how students, instructors, and professional developers regard code quality, including which features they value most.

Three studies [81]–[83] explored numerous quality aspects in a comprehensive model influenced by the ISO 25010, 42010, and 12207 standards for software quality, architecture, and process, respectively. They presented an ISO/IEC 25010-based quality methodology for evaluating the maintainability of RPG software, and showed a case study of how a mid-size software company with over twenty years of RPG application development integrated the quality model into its business processes as a continuous quality monitoring tool.

Four papers [84]–[87] reference IEEE STD 1061-1992 and ISO/IEC 25010:2011 SQuaRE software quality models. Software engineering and game quality models determined game quality. Several case studies use game testing. Case studies showed how to evaluate games for relevant results. Game makers can improve games using game quality outcomes, according to studies.

Another study [88] examined the gap and proposed an object-oriented design paradigm for information security. The above-classified design patterns don’t provide a path or standards to build an information system that meets confidentiality, integrity, and availability requirements.

This study [89] noted that the IEEE 729-1983 standard defines software quality as "the set of features of the software that determines how well it will meet the needs of the customer when it is used." The research presents an ACO strategy that intensifies the search around the metric neighborhood to increase the prediction accuracy of software quality estimation models. The implementation and comparisons showing positive results are presented.

This paper [90] anticipated the functional expansion of E/E systems and gave an overview of COSMIC (ISO 19761). Functional size can be used to estimate development work, manage project scope modifications, benchmark productivity, and normalize quality and maintenance ratios.

One paper [91] explored constructing a fuzzy inference rule base to assess mobile app quality. The solution uses the major provisions of the PNST 277-2018 standard "Comparative tests of mobile applications for smartphones".

Software interfaces Standard

The user interface is an umbrella term for all of the parts of a computer system that the user can see and interact with while using the system. To describe it on the basis of standards, you need to consider both the fixed parts, like the layout of the keyboard, panels, and reports, and the dynamic parts, like how programs should react to the user’s actions.

In one paper [92], the EN 50128 standard for software development for the European railway industry was used to look at agile practices for the first time. The study adds to what has already been learned about using agile methods in other controlled areas.

Information Representation Standard

The comprehension of various data types and the utilization of diverse representation techniques align with the established Standard and prove highly advantageous within the field of archaeology. This is particularly relevant as temporal knowledge in this domain often originates from multiple sources and is presented in various formats.

Geographic information Standard

Within the field of geographic information, these standards may define data handling methods, tools, and services. People think that data management means getting, processing, analyzing, accessing, displaying, and posting data for users and systems.

One publication [93] defined a spatio-temporal conceptual model for archaeological data based on the ISO 19100 family of standards, in particular ISO 19107 and ISO 19108, which give spatial and temporal properties for geographic features. To achieve interoperability, the article suggests using GeoUML.

After the derivation procedure, another publication [94] provided a framework using ISO 19107 to represent and infer temporal provenance data. Fuzzy Logic was used to assign a degree of confidence to values and Fuzzy Temporal Constraint Networks to model relationships between dating of different findings represented as a graph-based dataset. Archaeological data, especially temporal data, are often vague because many interpretations can coexist.
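As a rough illustration of how fuzzy logic can attach a degree of confidence to an uncertain date, the following Python sketch evaluates a trapezoidal membership function. The function name, the trapezoidal shape, and the example interval are assumptions made for illustration only; they are not taken from the framework in [94].

```python
def trapezoid(x, a, b, c, d):
    """Degree of membership of x in a trapezoidal fuzzy interval, with a <= b <= c <= d.

    Membership is 1.0 on the core [b, c], 0.0 outside [a, d],
    and varies linearly on the two slopes in between.
    """
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)  # rising slope
    return (d - x) / (d - c)      # falling slope


if __name__ == "__main__":
    # Hypothetical dating: certainly possible between years 100 and 200,
    # progressively less plausible down to 50 and up to 250.
    for year in (40, 75, 150, 225, 300):
        print(year, trapezoid(year, 50, 100, 200, 250))
```

Several such fuzzy intervals can coexist for the same finding, which matches the observation above that multiple interpretations of archaeological dates are often plausible at once.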

Data Image Standard

The establishment of a data compression algorithm involves the development of optimized data. The primary factors that affect the accuracy of this algorithm include the resolution of the image, the level of image noise, the adherence to quality and information standards, and the artifacts that are inherent in the imaging system.

This study [95] presented the development of FaceQvec, a software component designed to estimate the conformity of facial images with the criteria outlined in ISO/IEC 19794-5. This international standard provides general quality guidelines for face images, determining their suitability for official documents like passports or ID cards.

Information management Standard

The present Information Management Standard delineates fundamental principles and obligations that must be adhered to in order to achieve efficient Information Management. The primary objective of this system is to ensure the proper creation, management, retention, and disposal of information.

Data quality Standard

Data quality standards establish the principles of information and data quality and explain the path to data quality, and they can be used to manage the quality of digital data sets. This includes both structured data saved in databases and less structured data like images, audio, video, and electronic documents.

One article [96] addressed the estimation of information quality (IQ) in ICT systems. Recent publications and ISO information quality standards were examined. Due to the limits of existing IQ estimation approaches, the authors describe their own concept based on multidimensional and multi-layer modeling and uncertainty estimation.

Another article [97] proposed a custom user-centered web-based system based on lab requirements. By defining norms, standard processes, and procedures, supporting quality control and teamwork, and aiding project management, the implemented system improved efficiency and quality. The authors concluded that, while it is a small niche market for major manufacturers, a tailored LIMS standard is crucial for heritage science lab administration worldwide.

Quality management Standard

The following are essential elements that products, services, and processes must consistently adhere to in order to ensure that their quality aligns with expectations: requirements, specifications, guidelines, and characteristics. The items in question are suitable for their intended purpose. They effectively cater to the requirements of their users.

One paper [98] suggested process harmonization across remote sites as a "Best Practice" for similar organizations. A process for developing new tools and choosing a standard set was also established. CT DC achieved common KPIs, training, customer management, and career landscape with shared roles and responsibilities.

Four papers [99]–[102] wanted to show an ontology called SRMO, which tries to bring together all the terms and ideas related to RM and give a more complete picture of risk. This paper gives three examples of how this theory can be used in the field of software engineering to show how useful it is. The SRMO was made by analyzing, comparing, and combining the terms for RM that are used in different industry-wide standards, frameworks, and models, such as ISO 31000, COBIT, PMBOK, and CMMI. More important than anything else is the quality of software. Software quality traits show what software is all about. Quality management of the software creation process is an important way to solve the software quality problem. There are already a lot of standards for software quality management, like ISO 9001 and CMMI, which can help businesses set up a quality management system.

Structure of management Standard

A management system is a collection of procedures that an organization must adhere to in order to achieve its objectives. A management system standard offers a structured framework for establishing and managing a management system.

One paper [103] noted that it is difficult to assess the maturity of agile adoption. The first step in adopting agile methodologies in automotive software product lines is to evaluate the combination. Based on an interview study with 16 participants and a literature analysis, the paper created the ASPLA Model to assess agile software development using ISO 26550.

Risk assessment techniques Standard

This standard offers guidance on the selection and implementation of systematic techniques for assessing potential risks. It covers a variety of techniques for identifying and understanding risk. The recent update broadened the scope of its applications and enhanced the level of detail provided.

One paper [104] described a software package improved by the addition of a graphical tool for predicting the collection area. This tool gives the user a way to draw the structure, no matter how complicated it is. The software was tested on the National Telecommunications Corporation building, which is the tallest building in Sudan. Compared to Furse’s StrikeRisk v5.0, the package performed better in calculating risk components and total risk.

Telecommunication Standardization

Today’s converged communications market is merging voice, data, video, and wireless technologies. As more firms join the dynamic communications network, they recognize that standardization, planning, and continuous development are essential for functionality, interoperability, and reliability.

The Telecommunication Standardization Sector defines the basic transport and access technologies that power global communications networks. Standards power modern wireless, broadband, and multimedia technologies.

Wireless Networks Standard

Wireless standards encompass a collection of services and protocols that govern the behavior of Wi-Fi networks and other data transmission networks.

One paper [105] re-examined the issue of tag identification in UHF RFID systems in terms of time and energy usage. Previous tag reading procedures were thoroughly evaluated and analyzed. Building on prior art, the paper discussed a new design of a tag reading algorithm to improve the time and energy efficiency of the ISO/IEC 18000-7 EPC C1 Gen2 UHF RFID standard.

Three papers [106]–[108] noted that considerable progress has been made in using RFID technology in libraries over the last ten years. ISO 28560 is a new standard that aims to provide a shared platform for an industry that has grown up while different implementers have come up with their own ways to store RFID data. RFID standards such as ISO 14223, 14443, and 15693, which deal with air interface protocols, were mostly developed before deployment. However, standards such as ISO 28560, which deal with standardizing tag data models, were developed after implementations had reached a certain level of maturity.

Another paper [109] addresses IEEE 802.15.4. The study investigates the benefits of SDN in infrastructureless wireless networking systems such as W-PANs and the requirements for its use. SDWN, a complete SDN solution, is introduced and a prototype implementation is described.

Two papers [110], [111] present a framework for multipatient positioning in a wireless body area network (WBAN) that uses spatial sparsity and FFT-based feature extraction to track patients’ movements and report them to a central database server. This compressive sensing approach provides excellent precision and detail with low computational complexity.

Two publications [112], [113] address IEEE 802.16e. One contribution extends per-subcarrier PMI selection to band-wide selection and tests the recommended methods with IEEE 802.16e-2005 link-level simulations. Using one precoder per subcarrier band minimizes overhead. Another contribution is an easy and effective way to ensure that the extended-real-time polling service (ertPS) gets the most out of its uplink resources in IEEE 802.16e broadband wireless networks. Based on the MAC request header numbers, the suggested algorithm dynamically allocates uplink resources to subscriber stations whose base stations are receiving more requests.

Four papers [114]–[117] discussed a code stacking method that uses Walsh codes to double the data rate. How well the system reduces inter-symbol interference (ISI) in IEEE 802.15.6 determines how the amplitude-modulated FSDT technique is used. The study presented two ways to reduce ISI. Increasing the data rate made the signal-to-noise ratio 1 dB worse than with FSDT. The suggested interleaver design method doubles the amount of data that can be sent over 2×2 MIMO-OFDM communication systems that do not use transmit diversity.
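To illustrate why Walsh codes allow more than one symbol to share the same time slot, the following is a minimal sketch of orthogonal spreading and despreading. It is not the code stacking scheme of the cited papers. The function names, the code length of 4, and the choice of which two codes to use are assumptions made only for this example.

```python
def walsh_codes(order):
    """Return 2**order Walsh codes as rows of +1/-1 chips.

    Uses the Sylvester construction of a Hadamard matrix:
    H(2n) = [[H(n), H(n)], [H(n), -H(n)]].
    """
    rows = [[1]]
    for _ in range(order):
        rows = [r + r for r in rows] + [r + [-c for c in r] for r in rows]
    return rows


def spread(symbols, codes):
    """Multiply each +1/-1 symbol by its own code and add the results chip by chip."""
    length = len(codes[0])
    return [sum(s * c[i] for s, c in zip(symbols, codes)) for i in range(length)]


def despread(chips, code):
    """Correlate the combined chips with one code and normalize by the code length."""
    return sum(x * c for x, c in zip(chips, code)) // len(code)


codes = walsh_codes(2)                 # four codes of length 4
symbols = [1, -1]                      # two symbols sent in the same slot (illustrative)
tx = spread(symbols, codes[1:3])       # both symbols superimposed on one chip sequence
recovered = [despread(tx, c) for c in codes[1:3]]
print(recovered)
```

Because distinct Walsh codes have zero cross-correlation, each symbol can be recovered from the superimposed signal by correlating with its own code. This holds in the noiseless, perfectly synchronized case sketched here; ISI and noise, which the cited work is concerned with, are not modeled.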

One study [118] makes several contributions: it introduces novel proposals, uses the suggested IEEE 802.11p approach to calculate CTTs for each street, and uses SUMO as a traffic simulator for dynamic route planning.

Two papers [119], [120] showed that the assumption that these techniques degrade performance does not hold. With channel prediction, the improved SVD method performed almost as well as a spatial filtering method with IEEE 802.11ac channel prediction. SVD proved to be a good MIMO receiving method for time-varying channels.

Another study [121] on the IEEE 802.15.4 standard focuses on understanding the benefits of SDN in wireless networking settings without infrastructure, such as W-PANs, and the requirements for SDN in W-PANs. SDWN, a full SDN solution, is explained, along with a prototype implementation.

In one study [122], adaptive interrupt coalescing (AIC) was suggested as a way to save energy and improve performance. The Intel 82579 NIC and the e1000e Linux device driver have been used to set up EEE with AIC at the source. The results of the experiments show that the energy efficiency of AIC is better in most cases, even when performance is taken into account. In the best case, it is up to 37% better than the energy efficiency of traditional interrupt coalescing methods.

One paper [123] examines the statistical properties of 60 GHz channels in a staircase environment with the transmitter (Tx) fixed and the receiver (Rx) moving, and specifically how the moving Rx changes those properties. IEEE 802.15.3c provides a single-input-multiple-output (SIMO) model that can be used in an office or meeting room. Simulation results show that the Euclidean distance between the Tx and Rx is linked to almost every channel property.

One article [124] showed that holonic and CPS models work well together on the IEC 61499 standard for production control automation. This study uses UML models and a protocol based on industrial Ethernet (Profinet) to meet the needs of a distributed CPS that works in real time.

Networks Standard

A networking standard refers to a formally established document that has been created to outline the necessary technical requirements, specifications, and guidelines that must be consistently adhered to in order to ensure that networking devices, equipment, and software are suitable for their intended purpose. Standards play a crucial role in guaranteeing the attainment of high levels of quality, safety, and efficiency.

Four studies [125]–[128] fall into this group. One proposes an approach to AP selection that actively accounts for both types of mobility and demonstrates that the proposed method greatly outperforms the baseline method in terms of throughput. Because of the rapid growth of WLANs based on the IEEE 802.11 standard, more and more public places such as train stations and airports are offering WLAN services. Another work introduces a new type of acknowledgement called TACK and the corresponding TCP extension, TCP-TACK. TACK aims to reduce the number of ACKs sent to what the transport protocol actually requires. Without modifying IEEE 802.11 hardware, TCP-TACK can be used on top of commodity WLAN to provide high wireless transport goodput with little control overhead in the form of ACKs.

Two publications [129], [130] detailed preliminary work on conforming network entities and progress toward a building-sized test bed for this specification. Once the initial work is complete, Rowan University students and researchers will have a living smart sensor network for development and study. A prototype system monitors LV networks in near real time, and the publication explains how the first and second releases of this secondary substation automation equipment used the IEC 61850 and CIM standards.

One paper [131] covered the RFT-V and RFT-C specifications. It compares voltage, current, and power limits, as well as deployment methods, cable pairs, distance served, and so on. The publication concludes with safety measures for both standards, including UL/CSA/IEC 60950-21.

Another study [132] states that holders of FRAND-committed SEPs seek a higher royalty rate (holdup), while implementers want a lower rate (holdout). If negotiations fail, royalty rates are determined in court, as in litigation over 802.11, so SEP valuation must be calculated accurately. The study applies the top-down FRAND royalty rate approach and supplements it with the equivalent license strategy.

Another paper [133] implemented its approach for wireless broadband network applications in NS2. The new scheme evaluates ASVC in a more realistic simulation across wireless broadband networks using open-source software. Over IEEE 802.11n, ASVC in MGS mode outperforms CGS mode.

One paper [134] proposed ASBA to tackle congestion in IEEE 802.11 Wireless Mesh Networks. ASBA uses collision states to adjust contention windows, with the aim of improving contention performance in Wireless Mesh Networks and avoiding delivery delays.
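As background for how a contention window can react to collision state, the sketch below shows the conventional binary exponential backoff used in IEEE 802.11 contention. It is not the ASBA algorithm from [134], whose details are not given here. The function names are hypothetical, and the window limits of 15 and 1023 are assumed values commonly used for 802.11 OFDM PHYs.

```python
import random

CW_MIN = 15      # assumed minimum contention window (slots)
CW_MAX = 1023    # assumed maximum contention window (slots)


def next_cw(cw, collided):
    """Return the contention window to use for the next transmission attempt.

    After a collision the window roughly doubles, capped at CW_MAX.
    After a successful transmission it resets to CW_MIN.
    """
    if collided:
        return min(2 * (cw + 1) - 1, CW_MAX)
    return CW_MIN


def backoff_slots(cw, rng=random):
    """Draw a uniform random backoff counter in the range [0, cw]."""
    return rng.randint(0, cw)


# Illustrative run: three collisions followed by one success.
cw = CW_MIN
history = []
for collided in [True, True, True, False]:
    cw = next_cw(cw, collided)
    history.append(cw)
print(history)
```

An adaptive scheme would replace the fixed doubling and reset rules in `next_cw` with rules driven by the observed collision state, which is the design space the cited paper explores.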

Another paper [135] tests and analyzes the performance of an ISO 11898-1 gateway. Following the gateway study, the program code was optimized to improve performance. The findings show that the performance of the proposed gateway improved significantly after optimization.

Four studies [136]–[139] are grouped here. One proposed a beam training algorithm with dynamic beam ordering that meets the strict latency criteria of the latest mmWave standard negotiations. Simulation results show that different variations of the proposed scheme improve latency performance and received signal-to-noise ratio over the optimum beam training scheme based on exhaustive narrow beam search in IEEE 802.11ay. Another uses driving data obtained from V2X driving status information and predicted by an LSTM. The proposed technique accurately estimates maneuvers via V2X communications and follows the established V2X standard IEEE 802.11p.

De-Facto Standards

De facto standards are those that originate in the marketplace and are endorsed by a number of suppliers but lack formal status. For example, Microsoft Windows is a single company’s product that has not been formally recognized by any standards organization, yet it is a de facto standard. De facto standards in the communications industry frequently become formal standards after widespread acceptance.

Even though the internet protocol is the de-facto standard for internet connection, severely restricted devices with little processing power, working memory, and battery power capacity should be addressable via an IP-based network architecture. To meet this requirement, 6LoWPAN could be used to communicate with wireless devices. Wireless sensor networks are a hot issue these days, but a well-structured, wire-based infrastructure is sometimes preferable [140], [141].

According to one study [142], the technical advancement of wireless communication technologies, together with the need to model these increasingly complex systems effectively, produces a continual increase in simulation model complexity. At the same time, multi-core systems have emerged as the de facto standard hardware platform.

Another study [143] concerns the HEVC standard, which is now the de facto video coding standard. The OpenHEVC open source software was modified in this study to improve interoperability by using OpenMP instead of POSIX threads. The paper proposes a novel approach to parallelization throughout the decoding process. This method outperforms the POSIX-based one by at least 10% while providing greater interoperability.

Five publications [144]–[148] offered a programmable implementation and continuation of the same project in which behavior diagrams, namely use case, activity, and state diagrams, were chosen for critical study and possible enhancements. The Unified Modeling Language (UML) has become the de-facto standard for the design and specification of object-oriented systems, although its semi-formal structure has certain drawbacks.

Two papers [149], [150] looked at how a standards-based pro-competition legal interoperability framework can be used to keep future Internet service marketplaces open, inventive, and competitive. They explain the specific issues generated by a shift in favor of dominant de facto standards in ICT markets.

Another two publications [151], [152] note that HTTP Adaptive Streaming (HAS) protocols have gained popularity and become the de facto standard for video streaming services. They present FINEAS, a rate adaptation algorithm that enhances client QoE and fairness in multiclient contexts. It involves an in-network coordination system for equitable resource sharing.

Three articles [153]–[155] are grouped here. One implemented a subsumption architecture using Model Driven Development on a real-time physical platform that is compatible with the Robot Operating System, the current robotics standard. The work experimentally implements multi-layer, subsumption-based control of autonomous robots. Another paper proposed an alternative datatype-generic algorithm for calculating the difference between values of any algebraic datatype.

Four articles [156]–[159] are grouped here. Apache Spark is becoming the norm for modern data analytics in big-data systems, and it optimizes analytical workloads on various data sources through SQL query compilation. One article used runtime profiling and dynamic code generation to circumvent the restrictions of query compilation. JavaScript is becoming the mainstream language for general-purpose programs after dominating client-side web programming. Most engineering fields model complicated systems.

Four studies [160]–[163] are grouped here. Some concern a cross-platform, open source, distributed version control tool that works well with non-linear development and can handle projects of all sizes quickly and effectively. It has become the de facto standard for version control in software creation and is used by millions of software developers every day. In another study, the decision to use the Android SDK instead of Platys is based on two considerations, the first being that the Android SDK is the usual way to build location-aware apps for Android.

One paper [164] notes that hardware layers, libraries, kernels, and lightweight operating systems are often used to help with development. However, these usually offer little or no help with automatic worst-case execution time (WCET) estimation, so manual methods based on testing and measuring remain the de facto standard.

Smart Technology Standard

The acronym SMART stands for the various formats, processes, and tools that are essential for facilitating user interaction with standards, encompassing both human and technology-based users. The nineteen articles in this category show how these digital solutions cater to the requirements of various stakeholders, including industry representatives, regulators, end users, and society as a whole.

Internet of Things (IoT) Standard

The lack of standards poses challenges for the IoT because it makes it difficult for various systems and devices to collaborate and communicate effectively. Creating a universal standard that works across all IoT devices is hard because the devices can employ a wide range of communication protocols and data formats and are produced by many different vendors.

One study [165] examines the signals obtained from discreet sensors integrated into a wheelchair, which offer convenient plug-and-play functionality and automatic identification. These signals are collected and initially processed at the platform level, and subsequently transmitted to a server application on a host PC using the IEEE 802.15.4 wireless communication protocol.

According to one study [166], enabling full connectivity among these devices is a huge task. To address this, various communication protocols have been proposed. IEC 61850 has become the de facto standard for sophisticated communication networks in power systems due to its object-oriented design and its capacity to handle enormous volumes of data exchanges.

Smart Grids Standard

Smart grid technology and the products and services that use it offer a lot of potential for businesses in all fields. The number of successful smart grid projects keeps growing, and each one differs in size and scope. Even so, there is not yet a single agreed definition of "smart grid".

Two articles [167], [168] showed how the proposed modeling idea could be built on the power utility interoperability approach IEC 61850 and the distributed automation reference model IEC 61499. A lot of attention has also been given to the ICT standards, such as IEC 61850, which combines ICT with MAS.