Draft 04: Impact Assessment Requirements in the GDPR vs the AI Act: Overlaps, Divergence, and Implications

working draft

✍ Tytti Rintamaki*, Delaram Golpayegani, Dave Lewis, Edoardo Celeste, Harshvardhan J. Pandit

Description: In this paper, we analysed DPIA requirements from all 30 EU/EEA DPAs and found variance among them. We then aligned the DPIA-required conditions with the Annex III high-risk cases in the AI Act and found a large overlap between the GDPR and the AI Act.

under review (in-press) 🔓 open-access archives: harshp.com, OSF

Abstract Under the EU General Data Protection Regulation (GDPR), the processing of personal data with new technologies (including Artificial Intelligence) requires conducting a Data Protection Impact Assessment (DPIA) to evaluate potential risks to the rights and freedoms of individuals. In addition to defining categories of processing that require a DPIA, the GDPR empowers Data Protection Authorities (DPAs) to define additional categories where a DPIA must be conducted. In 2024, the EU adopted the AI Act, which classifies artificial intelligence (AI) technologies according to their level of risk to fundamental rights, democracy, and society. It also requires conducting a Fundamental Rights Impact Assessment (FRIA). The compelling question thus emerges of how and where DPIAs are required and what their relationship is vis-à-vis the risk assessment required by the AI Act. This paper first analyses DPIA requirements collected from all 27 EU member states and 3 EEA countries and then compares them with the 'high-risk' areas defined in the EU AI Act's Annex III. We show the overlaps, gaps, and divergence in EU Member States' approaches to applying DPIAs to AI. We also discuss how such assessments require coherence and cooperation regarding information sharing across the AI value chain to identify and resolve risks and impacts efficiently. Our findings are significant for the implementation of the GDPR and AI Act and for cooperation between their respective authorities. They also highlight the necessity to harmonise the application of DPIAs with the AI Act's high-risk areas.

GDPR, Regulatory Compliance, DPIA, AI Act, Fundamental Rights, AI

Introduction

In the evolving landscape of artificial intelligence (AI), the interplay between technology and individual rights has become a focal point for all stakeholders, from policymakers and regulators to developers and users. As AI technologies are increasingly integrated into our lives, ensuring that these systems align with fundamental rights and freedoms has become urgent. In the European context, the General Data Protection Regulation (GDPR) [1] and the AI Act, whose final version was published in 2024 [2], are two regulatory frameworks seeking to protect the rights and freedoms of individuals [3]. The GDPR emphasises the protection of personal data and privacy, while the AI Act represents the first law in the world to comprehensively regulate AI.

The European regulatory landscape for digital technologies is elaborate, with significant overlap between the AI Act, GDPR, Data Governance Act, and Digital Services Act [4]. These frameworks protect fundamental rights and promote responsible digital practices through varying scopes. To encourage the reuse of data and enable data altruism, the Data Governance Act establishes frameworks that facilitate the sharing of data while requiring the assessment of risks and impacts of its intended use. The Digital Services Act focuses on online platforms and digital services, touching on issues also raised in the GDPR, such as algorithmic explanations and transparency. Research has investigated areas of overlap and conflict between these regulations, focusing specifically on how to balance and comply with all of them [ref]. Regarding the overlap between the GDPR and AI, the GDPR addresses AI through its provisions on automated decision-making, though it prescribes only a few requirements, such as providing opt-outs and requiring human intervention in specific cases.

Impact assessments are instruments that regulations use to foster responsible innovation while tackling growing concerns such as fairness, harm, and upholding rights and freedoms. GDPR Article 35 requires Data Protection Impact Assessments (DPIA) to be conducted for processing activities likely to result in a high risk to the rights and freedoms of natural persons. Similarly, AI Act Article 27 requires conducting Fundamental Rights Impact Assessments (FRIA) for AI systems that are classified as ‘high-risk’ under Article 6 and Annex III. It is therefore evident that both the GDPR and the AI Act provide a framework for categorising activities as "high-risk" and require assessing their impact on rights and freedoms, via the DPIA and the FRIA respectively.

Even though the identification and categorisation of high-risk activities are central to both regulations, the GDPR and the AI Act have different scopes and are enforced by different authorities, which increases the complexity of compliance for entities subject to both. Furthermore, the GDPR requires the DPAs of member states and EEA countries to produce their own lists of processing activities that require conducting a DPIA. Cases where AI systems utilise personal data will thus be regulated under both the GDPR and the AI Act, and their categorisation as ’high-risk’ will depend on both regulations as well as on the countries involved. Despite the increasingly widespread use of AI applications involving people, and thus personal data, the AI Act does not dictate how its obligations should be interpreted alongside those of the GDPR, nor does it provide a mechanism for cooperation among their respective authorities. If left unexplored, this overlap between the GDPR and the AI Act will lead to regulatory uncertainty and delays in enforcement, and will create economic barriers to innovation. Furthermore, despite six years of GDPR enforcement, there have been no analyses of the variance in DPIA requirements across the EU, which means the implementation of the AI Act is certain to be fragmented as entities scramble to understand how the use of AI is classified as ’high-risk’ under each country’s GDPR requirements. The study of overlap in high-risk classifications across both the GDPR and the AI Act is therefore urgently required.

To address this important yet underexplored overlap between the GDPR and the AI Act, we investigate the intersections in the categorisation of high-risk technologies across the GDPR and the AI Act, as well as the implications of potential overlaps and divergences. To achieve this, we have the following research objectives:

RO1: Identify the key concepts that determine high-risk processing activities in the GDPR and each Data Protection Authority’s DPIA-required lists (Section 3);

RO2: Analyse high-risk AI systems in AI Act Annex III to identify the potential applicability of the GDPR DPIA based on the key concepts identified in RO1 (Section 4.1);

RO3: Compare high-risk categorisations in the GDPR and the AI Act to identify overlaps, gaps, and variance (Section 4.2);

RO4: Assess the implications of the findings in RO3 on the AI value chain.

Our paper makes four novel contributions: (1) We provide a landscape analysis of DPIA-required conditions across the GDPR, EDPB guidelines, and all 27 EU and 3 EEA countries; (2) We provide a framework to simplify high-risk categorisations under the GDPR and the AI Act based on key concepts; (3) We identify which of the AI Act’s Annex III high-risk categorisations are subject to the GDPR and when, conversely, they are or can be categorised as high-risk under the GDPR; and (4) We describe how the obligations to conduct a DPIA under the GDPR, and a risk assessment and FRIA under the AI Act, necessitate sharing information across the AI value chain, thereby demonstrating how the impact of the AI Act reaches upstream stakeholders, i.e. those involved early in AI development.

State of the art

GDPR

Both the GDPR and the AI Act take risk-based approaches to protecting rights and freedoms [5], [6]. In the GDPR, the assessment of the level of risk entails a binary classification (Art. 35): data processing is either high-risk or not high-risk. Within the GDPR, the DPIA is an ex-ante or “preventative assessment” [7] that data controllers are obliged to conduct when the processing of personal data is likely to result in a high risk to the rights and freedoms of individuals. Art. 35 GDPR details the processing activities that require such an assessment. Art. 35(1) refers to processing using “new technologies”, a technology-neutral and future-proof formulation which today includes AI technologies. Art. 35(3) then specifies three circumstances in which a DPIA is required. Firstly, when a systematic and extensive evaluation of natural persons based on automated processing, including profiling, is the basis for decisions that produce legal effects or similarly significantly affect them. Secondly, in case of large-scale processing of special categories of data (Art. 9) or of data relating to criminal convictions and offences (Art. 10). Thirdly, in case of large-scale systematic monitoring of a publicly accessible area. In addition to these, the European Data Protection Board (EDPB) provides guidance for DPIAs that uses these criteria to identify nine conditions where a DPIA is required under the GDPR [8].
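The three Art. 35(3) circumstances can be read as a simple rule check. The Python sketch below is an illustration only, assuming a hypothetical `ProcessingActivity` record whose boolean fields are our own simplification of the legal text; it does not capture Art. 35(1), the EDPB criteria, or national DPIA lists, so an empty result does not mean that no DPIA is required.

```python
from dataclasses import dataclass


@dataclass
class ProcessingActivity:
    """Hypothetical, simplified flags describing one processing activity."""
    automated_evaluation: bool = False   # systematic, extensive automated evaluation incl. profiling
    significant_effects: bool = False    # decisions with legal or similarly significant effects
    large_scale: bool = False
    special_category_data: bool = False  # Art. 9 GDPR
    criminal_offence_data: bool = False  # Art. 10 GDPR
    monitors_public_area: bool = False   # systematic monitoring of a publicly accessible area


def art35_3_triggers(activity: ProcessingActivity) -> list:
    """Return the Art. 35(3) GDPR points (a)-(c) that the activity matches."""
    triggers = []
    if activity.automated_evaluation and activity.significant_effects:
        triggers.append("35(3)(a)")
    if activity.large_scale and (activity.special_category_data
                                 or activity.criminal_offence_data):
        triggers.append("35(3)(b)")
    if activity.large_scale and activity.monitors_public_area:
        triggers.append("35(3)(c)")
    return triggers


# Example: automated credit scoring that decides access to a loan
print(art35_3_triggers(ProcessingActivity(automated_evaluation=True,
                                          significant_effects=True)))  # ['35(3)(a)']
```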

While the GDPR is a pan-EU regulation that needs to be applied consistently, the independence of Member States and their Data Protection Authorities (DPAs) introduces variance in its implementation. Regarding Data Protection Impact Assessments, Art.35(4) requires each Member State's DPA to compile a list of the processing activities that require a DPIA in its jurisdiction, which leads to variance across Member States. Prior work has demonstrated similar variance in DPAs’ guidelines on the information to be maintained in records of processing activities (ROPA) templates, which are required under Art. 30 GDPR [9]. This variance affects organisations’ compliance activities, especially when an entity is present in several Member States or when data subjects from multiple Member States are involved. To date, no work has similarly explored the variance in DPIA requirements, i.e., in what is considered high-risk across the countries that implement the GDPR.

Despite the importance of the DPIA as an obligation intended to protect rights and freedoms, there has been little work on building support systems that enable an actor to identify when their activities are high-risk under the GDPR and require a DPIA, or what kinds of risks and impacts can be foreseen. Authoritative efforts such as the Privacy Impact Assessment (PIA) software [10] of CNIL (the French DPA), which can create a knowledge base of shared risks to be used in a DPIA, or the ISO/IEC 29134:2023 guidelines for privacy impact assessments [11], do not explore the underlying question of what makes an activity high-risk; answering it requires interpreting textual descriptions in laws on a case-by-case basis until suitable guidelines and case law are established. Therefore, building on prior similar work exploring the AI Act’s high-risk categorisations [12], it is necessary to create a framework that identifies the ‘key concepts’ which simplify the determination of high-risk status under the GDPR (RO1), with a view towards its use in automation and support tools [13].

EU AI Act

The AI Act adds a third category to the risk levels described above for the GDPR, namely unacceptable AI uses, which are prohibited ipso facto (Art. 5) [6]. The AI Act defines risk as ‘the combination of the probability of an occurrence of harm and the severity of that harm’ (Art. 3). The specific criteria for which applications are considered high-risk are defined in Annex I, which lists sectoral laws, and Annex III, which describes specific use cases. In this paper, we focus on the high-risk systems listed in Annex III; we exclude prohibited AI systems, as they underwent significant revisions in the last legislative update, and Annex I, as it would require the interpretation of other sectoral laws [14].

Despite the importance of Annex III in determining high-risk status under the AI Act, there has been little analysis of its contents. Golpayegani et al. [12] explored the clauses in Annex III and identified five key concepts whose combinations can be used to simplify the identification of high-risk applications. These five concepts represent (1) Domain or Sector, e.g. Education; (2) Purpose, e.g. assessing exams; (3) Capability or involved AI technique, e.g. information retrieval; (4) AI Deployer, e.g. school/University; and (5) AI Subject, e.g. students. This framework of five concepts is useful for understanding which information is required to assess whether an application is high-risk under the Annex III clauses. The authors also provide taxonomies for each of the five concepts to support expressing practical use cases and creating automated tooling to support risk assessments. We applied this approach and its concepts in our DPIA analysis (RO1), to identify when the GDPR is applicable to Annex III clauses (RO2), and to compare the GDPR and the AI Act requirements regarding high-risk categorisation (RO3).
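To illustrate how the five concepts can be combined, the following Python sketch encodes a use case as five optional fields and treats an Annex III entry as a pattern in which unspecified concepts act as wildcards. The class, the matching rule, and the example pattern (built from the education example above) are our own assumptions for illustration, not the taxonomies or tooling published in [12].

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class AIUseCase:
    """The five key concepts of [12]; None means 'not specified'."""
    domain: Optional[str] = None
    purpose: Optional[str] = None
    capability: Optional[str] = None
    deployer: Optional[str] = None
    subject: Optional[str] = None


def matches(pattern: AIUseCase, use_case: AIUseCase) -> bool:
    """True if every concept the pattern specifies has the same value in the use case."""
    for f in fields(pattern):
        required = getattr(pattern, f.name)
        if required is not None and getattr(use_case, f.name) != required:
            return False
    return True


# Hypothetical pattern for an education-sector clause
education_pattern = AIUseCase(domain="education", purpose="assessing exams")

exam_grader = AIUseCase(domain="education", purpose="assessing exams",
                        capability="information retrieval",
                        deployer="university", subject="students")
print(matches(education_pattern, exam_grader))  # True
```

Leaving concepts unspecified in a pattern mirrors how many Annex III clauses constrain only some of the five concepts.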

Other Assessments

Calvi and Kotzinos [15] discuss how the regulations require assessments at different stages of a product lifecycle: the GDPR’s DPIA before personal data processing, the AI Act’s conformity assessment before placing the AI system on the market, the Digital Services Act’s risk assessments before deployment, and the Fundamental Rights Impact Assessment before putting the system into use. They highlight areas of concern that we also examine, namely how the different assessments address different risks, involve different entities and types of data, assign responsibility for the assessment differently, and are conducted at different stages. We discuss how this is further complicated by the nature of the AI lifecycle in Section 5, and we agree with the authors’ proposal for coordination to improve future policy choices. We believe that this coordination could take the form of information sharing among the various assessments and entities, assisting actors both upstream and downstream.

Some European countries have created frameworks and guidelines for trustworthy and responsible AI systems that build on the DPIA framework outlined in the GDPR in an AI-specific context. For example, CNIL (the French DPA) released guidance on ensuring that the use of AI systems complies with the GDPR [16], with an emphasis on the development stage early in the lifecycle, an area not covered by the AI Act itself. Their guides bridge the gap between the different regulatory frameworks, assisting with multiple aspects of the applicable legal regime. Regarding the GDPR, they assist in defining the purpose(s) of the system, identifying controllers and processors, ensuring the lawfulness of processing, encouraging privacy by design, and conducting a DPIA, amongst other things. Interestingly, CNIL discusses the risk criteria in the EU AI Act and takes the stance that all the high-risk systems listed in the AI Act will have to conduct a DPIA when personal data is involved. Our findings in this paper show that the connection between high-risk systems and guidance on when to carry out a DPIA is much more complicated, and that the conditions and criteria are difficult to interpret. We explain the reasons in the following sections.

Entities carrying out these assessments need to share information and coordinate with one another to obtain the information required for each assessment. For example, the Dutch government’s 2022 report [17] shows how the assessment of the impact on fundamental rights and freedoms can be based on the GDPR’s DPIA. It reuses the DPIA process of identifying the objectives of data processing but, as the DPIA focuses on personal data, concludes that much more needs to be added to account for the various elements involved in using algorithms. We build on this argument for the DPIA as a baseline in RO4, exploring the implications of DPIA requirements for the AI Act across different stages of the AI development lifecycle, in order to reduce each entity’s burden in conducting its assessments and to ensure the appropriate enforcement of regulations.

Analysis of DPIA criteria

Methodology

To identify the conditions under which a DPIA is necessary, we gathered all the processing activities listed in legal texts, guidelines and Member State authorities’ DPIA-required lists. Specifically, we used the criteria defined in GDPR Art.35(3) and the lists of processing activities requiring a DPIA published by the DPAs of all 27 EU member states and 3 EEA countries implementing the GDPR [16], [18]–[51]. In consolidating these, we differentiated between pan-EU legally binding requirements (mentioned in the GDPR or by the EDPB [8]) and those limited to specific countries through their respective DPIA lists. Where guidelines were not available in English, we translated the documents using the eTranslation service provided by the European Commission [52]. We expressed each DPIA-required condition as a set of ’key concepts’ (further described in Section 3.2), based on prior work applying similar techniques to the GDPR’s Records of Processing Activities (ROPA) [9] and the AI Act’s Annex III cases [2].
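The distinction between pan-EU and country-specific conditions, and the per-source counts shown in Figure 1, can be sketched as follows. This is a minimal Python illustration assuming each consolidated condition is stored together with the set of sources that list it; the three records reuse examples mentioned elsewhere in this paper and are not the full dataset of 94 conditions.

```python
from collections import Counter

PAN_EU_SOURCES = {"GDPR", "EDPB"}


def scope(sources: set) -> str:
    """'pan-EU' if the GDPR or EDPB lists the condition, else 'country-specific'."""
    return "pan-EU" if sources & PAN_EU_SOURCES else "country-specific"


def conditions_per_source(conditions: dict) -> Counter:
    """Count how many conditions each source lists (one bar per source in Figure 1)."""
    counts = Counter()
    for sources in conditions.values():
        counts.update(sources)
    return counts


# Illustrative subset only: condition -> sources (ISO 3166-1 alpha-2 codes) listing it
conditions = {
    "large-scale processing of special categories": {"GDPR"},
    "use of smart meters": {"HU", "PL", "RO"},
    "any use of AI in processing": {"AT", "CZ", "DE", "DK", "GR"},
}

for name, sources in conditions.items():
    print(f"{name}: {scope(sources)}")
print(conditions_per_source(conditions)["PL"])  # 1
```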

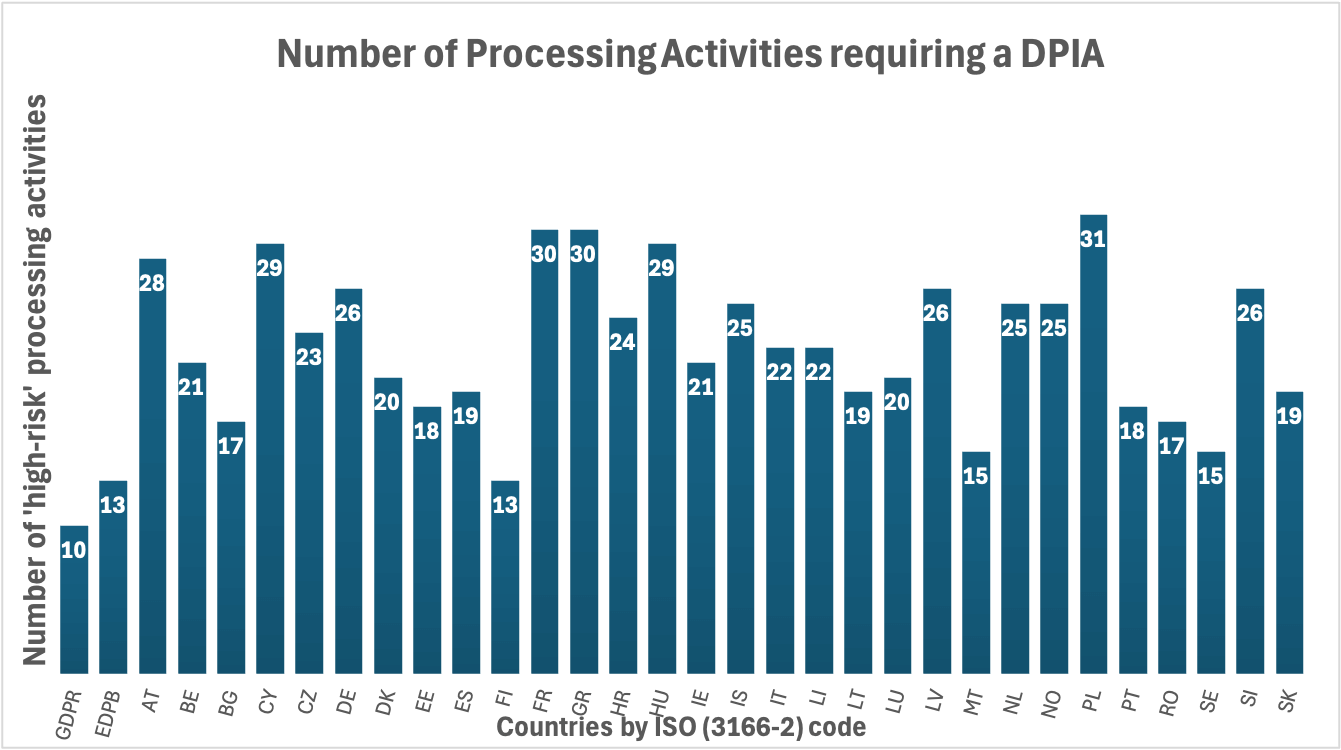

Through this exercise, we compiled a list of 94 distinct activities representing all DPIA-required conditions from the GDPR, EDPB, and member states’ guidelines; this list can be found in the Appendix. Figure 1 summarises the variance in the number of DPIA-required conditions across these sources. Each member state is referred to by its ISO 3166-1 alpha-2 code, for example, ’AT’ for Austria or ’FR’ for France. The GDPR and EDPB are shown first, followed by the member states in alphabetical order. This allows a visual comparison of how many DPIA-required activities the data protection authority of each country has defined in addition to those in the GDPR and the EDPB guidelines. The number at the top of each bar indicates the total number of processing activities listed by the specific authority, for example, 30 activities listed by the Greek Data Protection Authority or 13 activities listed in the guidelines published by the EDPB.

Poland had the most conditions (n=31), followed by Cyprus, France and Greece (n=30). The fewest conditions were found in Sweden and Malta (n=15). The average number of activities was around 22, a stark increase from the 10 listed in the GDPR or the 13 in the EDPB guidelines. Notably, the bulk of DPIA-required conditions in our list comes from country-specific lists (82 of 94). Among these, recurring activities include (large-scale) processing of communication and location data (23 countries), (large-scale) processing of employee activities (19 countries), and processing with legal effects such as access to or exclusion from services (12 countries). More specific activities, such as large-scale processing of financial data (mentioned by five member states) or electronic monitoring at a school (mentioned by two member states), are narrowly defined types of processing chosen by individual authorities. An interesting finding is that the use of any AI in processing requires a DPIA in Austria, the Czech Republic, Denmark, Germany, and Greece.

Key concepts for determining high-risk status

To express these 94 conditions for high-risk processing activities in a way that facilitates comparison, we identified their key concepts, such that providing values for these concepts is sufficient to determine whether an activity is high-risk according to the collected conditions. The concepts we identified in this manner were: (1) Purpose of the processing activity; (2) Personal Data, especially whether it is sensitive or a special category, e.g. health data as per Art. 9 GDPR or criminal convictions and offences as per Art.10 GDPR; (3) Data Subjects, especially whether they are vulnerable, e.g. children or minorities; (4) involvement of specific Technologies, especially whether they are innovative and untested, e.g. AI; and (5) Processing Context, such as the involvement of automated decision-making or profiling, and whether the processing produces legal effects on the data subject. Not all key concepts were required to express each high-risk condition. For example, large-scale processing of special categories under Art.35(3b) GDPR can be expressed as the combination of two concepts: processing context (large scale) and personal data (special categories).
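As a minimal sketch of this idea, assuming that conditions and activities are both written as sets of (concept, value) pairs, a condition applies when all of its pairs appear in the activity description. The Python below encodes the Art.35(3b) example from the text (restricted to special categories for brevity); the `DPIACondition` class, the concept labels, and the example activity are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DPIACondition:
    """A DPIA-required condition as the key concept values that must all be present."""
    label: str
    source: str          # "GDPR", "EDPB" or a country code
    required: frozenset  # set of (concept, value) pairs


def condition_met(condition: DPIACondition, activity: set) -> bool:
    """The condition holds if the activity contains every required (concept, value) pair."""
    return condition.required <= activity


ART_35_3B = DPIACondition(
    label="Large-scale processing of special categories",
    source="GDPR",
    required=frozenset({("processing_context", "large scale"),
                        ("personal_data", "special category")}),
)

hospital_analytics = {("purpose", "patient triage"),
                      ("processing_context", "large scale"),
                      ("personal_data", "special category")}
print(condition_met(ART_35_3B, hospital_analytics))  # True
```

Extra concepts in the activity (here, the purpose) do not prevent a match, which reflects that not every key concept is needed to express every condition.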

Comparing High-Risk categorisation between DPIA and Annex III

Deployers of certain high-risk AI systems have to conduct a Fundamental Rights Impact Assessment (FRIA) under AI Act Art.27. We first expand on the interplay between the DPIA and the FRIA and identify similarities through which our DPIA analysis can also support FRIA processes.

Interplay between DPIA and FRIA

The DPIA and FRIA are both ex-ante activities that must be performed before an AI system is deployed. Additionally, a DPIA may also be required in the early stages, or upstream, of the AI development lifecycle, as mentioned in Section 2.2. Given the similarities between the FRIA and DPIA [53], one of the critical questions in conducting a FRIA concerns its integration with existing impact assessments, in particular the DPIA. In fact, AI Act Art.27(4) explicitly acknowledges the reuse of the DPIA as a ’complement’ to the FRIA obligations. We identified what information is required for both and aligned it to understand the extent to which existing DPIA information can be reused in the FRIA. AI Act Art.27(1a) is unclear about what information is required in “a description of the deployer’s processes in which the high-risk AI system will be used in line with its intended purpose”; we resolved this by using existing interpretations of the “systematic description” under Art.35(7a) GDPR. Existing GDPR guidance states that this information covers the nature (e.g. sensitivity of data), scope (e.g. the scale of data and data subjects, frequency, and duration), and context (e.g. retention periods, security measures, and involvement of novel technologies) [8] of processing activities. We posit that the same information would also be necessary for a FRIA. Based on this assumption, our analysis of DPIA requirements in Annex III cases should also be useful in identifying the information required for a FRIA.
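The reuse we posit above can be pictured as pre-filling part of a FRIA from an existing DPIA. The Python sketch below assumes that the Art.35(7a) systematic description is stored as its nature, scope and context elements; `SystematicDescription`, `draft_fria_description`, and the example values are hypothetical, and the output is only a starting draft for the Art.27(1a) description, not a complete FRIA.

```python
from dataclasses import dataclass


@dataclass
class SystematicDescription:
    """Art.35(7a) GDPR systematic description, split as in the guidance cited as [8]."""
    nature: str   # e.g. sensitivity of data
    scope: str    # e.g. scale of data and data subjects, frequency, duration
    context: str  # e.g. retention periods, security measures, novel technologies


def draft_fria_description(dpia: SystematicDescription, intended_purpose: str) -> str:
    """Hypothetical helper: reuse DPIA content as a draft Art.27(1a) AI Act description."""
    return "\n".join([
        f"Intended purpose: {intended_purpose}",
        f"Nature: {dpia.nature}",
        f"Scope: {dpia.scope}",
        f"Context: {dpia.context}",
    ])


dpia = SystematicDescription(
    nature="CVs and interview scores of job applicants",
    scope="about 5,000 applicants per year, processed for each hiring round",
    context="retained for 6 months; uses a machine learning ranking model",
)
print(draft_fria_description(dpia, "shortlisting candidates for interviews"))
```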

Interaction between high-risk concepts (DPIA and Annex III)

Before analysing whether a DPIA is required, we must first establish that the activity is subject to the GDPR, i.e. that it involves personal data. Only after ascertaining this can we ask: “When do high-risk AI systems involving risky (personal) data processing require a DPIA?” To answer this, we applied the key concepts from Section 3.2 as follows: (1) the purpose of the AI system; (2) the involvement of personal data, especially whether it is sensitive or a special category; (3) the subject of the AI system, especially whether they are vulnerable; (4) the possible involvement of specific technologies that trigger a DPIA, such as the use of smart meters in Annex III-2a; and (5) the processing context, such as the involvement of automated decision-making, profiling, and producing legal effects. In addition, we had to codify certain exemptions mentioned in Annex III, such as in III-5b, which excludes systems whose purpose is to detect financial fraud, or in III-7d, which excludes the verification of travel documents. The output of this activity can be found in the Appendix. This allowed us to understand which of the GDPR’s DPIA-required conditions could apply to each of the AI Act Annex III clauses.
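Codifying an exemption means that a use case can match a clause's key concepts and still fall outside it. The following Python sketch shows one way to express this for III-5b, assuming a use case is a flat dictionary of concept values; the `AnnexIIIClause` class, the concept labels, and the example use cases are hypothetical, and this simplified encoding is not a legal test.

```python
from dataclasses import dataclass, field


@dataclass
class AnnexIIIClause:
    """Hypothetical encoding of an Annex III clause: required concept values plus exemptions."""
    clause_id: str
    required: dict = field(default_factory=dict)    # concept -> value that must be present
    exemptions: dict = field(default_factory=dict)  # concept -> value that excludes the use case


def in_scope(clause: AnnexIIIClause, use_case: dict) -> bool:
    """True if the use case has all required values and none of the exempting ones."""
    has_required = all(use_case.get(c) == v for c, v in clause.required.items())
    exempted = any(use_case.get(c) == v for c, v in clause.exemptions.items())
    return has_required and not exempted


III_5B = AnnexIIIClause(
    clause_id="III-5b",
    required={"capability": "evaluating creditworthiness", "subject": "natural persons"},
    exemptions={"purpose": "detecting financial fraud"},
)

loan_scoring = {"capability": "evaluating creditworthiness",
                "subject": "natural persons", "purpose": "loan approval"}
fraud_check = dict(loan_scoring, purpose="detecting financial fraud")
print(in_scope(III_5B, loan_scoring), in_scope(III_5B, fraud_check))  # True False
```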

Applicability of GDPR and DPIA in high-risk AI systems

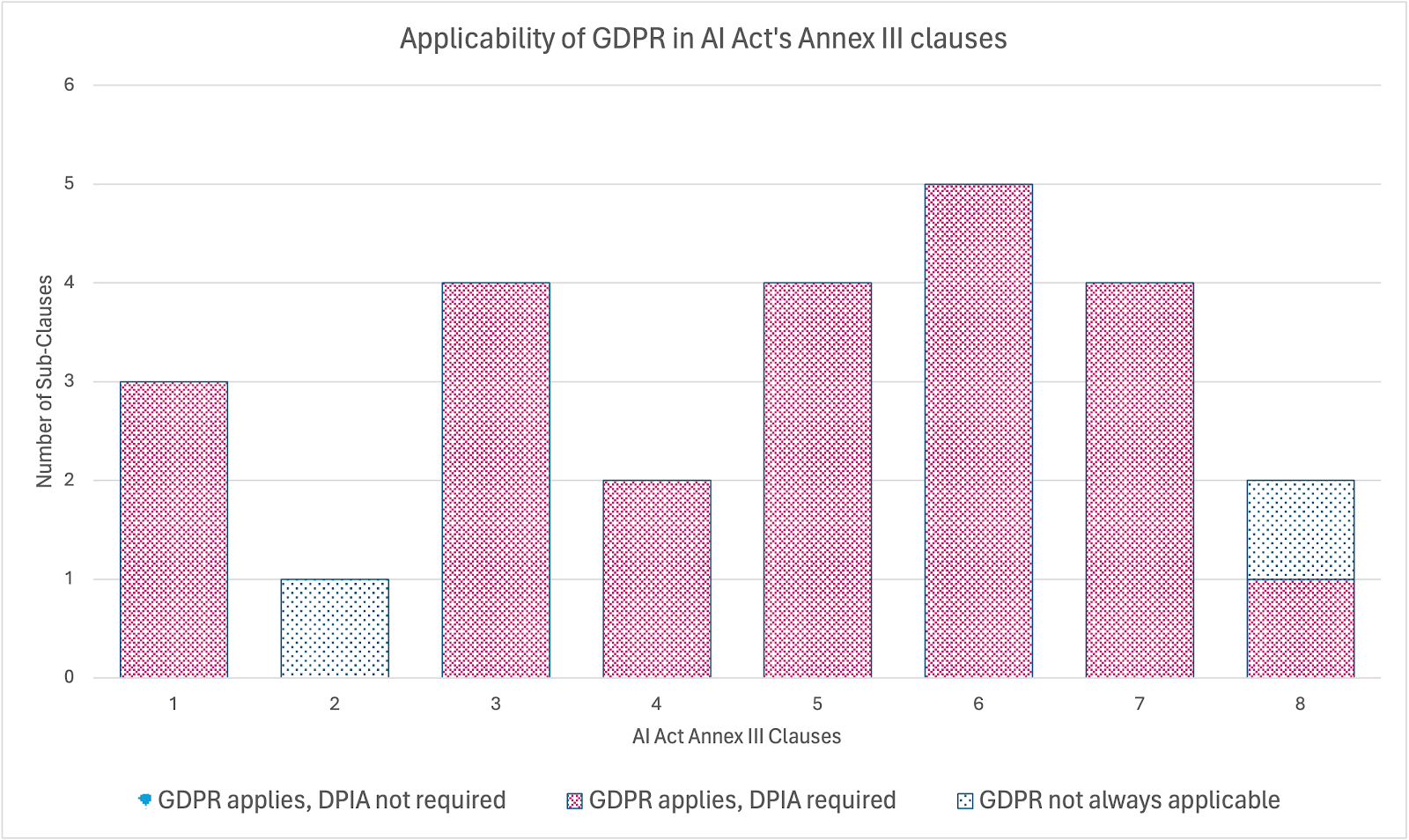

Expanding on the concepts above that allowed us to express the high-risk conditions, this section provides detailed examples and explanations of how these concepts interacted. First, to assess the applicability of the GDPR to Annex III clauses, we examined the 25 high-risk descriptions (8 clauses and their subclauses) listed in Annex III and assessed whether they involved personal data explicitly (i.e. it can be reasonably inferred from the description) or conditionally (i.e. it may potentially be involved in a particular application).

Similarly, we identified whether each of the key concepts is explicitly or conditionally applicable in each of the Annex III clauses. Where we identified conditional applicability, we added an identifier to distinguish the conditional variant from the explicit clause in Annex III, so that we could tell which Annex III clauses will always be subject to the GDPR and which may be conditionally. Finally, we identified whether the combination of key concepts matched any of the DPIA-required conditions identified in Section 3. Here, we distinguished whether each condition came from the GDPR (i.e. GDPR Art.35 or the EDPB) or from a specific member state list, which let us determine whether the applicability of a DPIA-required condition was uniform across the EU (i.e. it came from the GDPR) or varied (i.e. it is present only in one or more countries).
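The provenance rule described above can be summarised in a few lines. This Python sketch assumes that, for each Annex III clause or variant, the sources of all matched DPIA-required conditions are already known; the function name and its output labels are our own, for illustration only.

```python
PAN_EU_SOURCES = {"GDPR", "EDPB"}


def dpia_provenance(matched_sources: list) -> tuple:
    """Classify a clause by where its matched DPIA-required conditions come from.

    Returns ("uniform", []) if any match comes from the GDPR or EDPB,
    ("varies", [country codes]) if only national lists match, and
    ("no match", []) if no DPIA-required condition matched.
    """
    if not matched_sources:
        return ("no match", [])
    if any(s in PAN_EU_SOURCES for s in matched_sources):
        return ("uniform", [])
    return ("varies", sorted(set(matched_sources)))


# Smart meters (III-2a.1) match conditions from three national lists only
print(dpia_provenance(["HU", "PL", "RO"]))  # ('varies', ['HU', 'PL', 'RO'])
```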

From this exercise, we found that personal data was explicitly involved, and hence the GDPR is applicable, in 23 of the 25 Annex III clauses, as seen in Figure 2. The only clauses where the GDPR is not always applicable are Annex III clauses 2a and 8a, though the GDPR may be conditionally applicable depending on the involvement of personal data in a particular use case. Clause 2a concerns critical infrastructure, namely AI systems used as safety components in the management and operation of critical digital infrastructure, road traffic, and the supply of water, gas, heating and electricity; the data present in safety components of infrastructure does not clearly involve personal data. Similarly, Clause 8a concerns the administration of justice and democratic processes, specifically AI systems intended to assist in researching and interpreting facts and the law and in applying the law to a concrete set of facts. Here, too, the explicit involvement of personal data is absent.

However, we did identify use cases under these two clauses that involve personal data and can require a DPIA. An example use case for Clause 2a is the use of smart meters in homes to measure the consumption of water, electricity and gas, which requires a DPIA in Hungary, Poland and Romania. An example use case for Clause 8a is a system that, in researching and interpreting facts and the law, utilises data relating to criminal offences (Art. 10 GDPR), whose large-scale processing requires a DPIA under the GDPR.

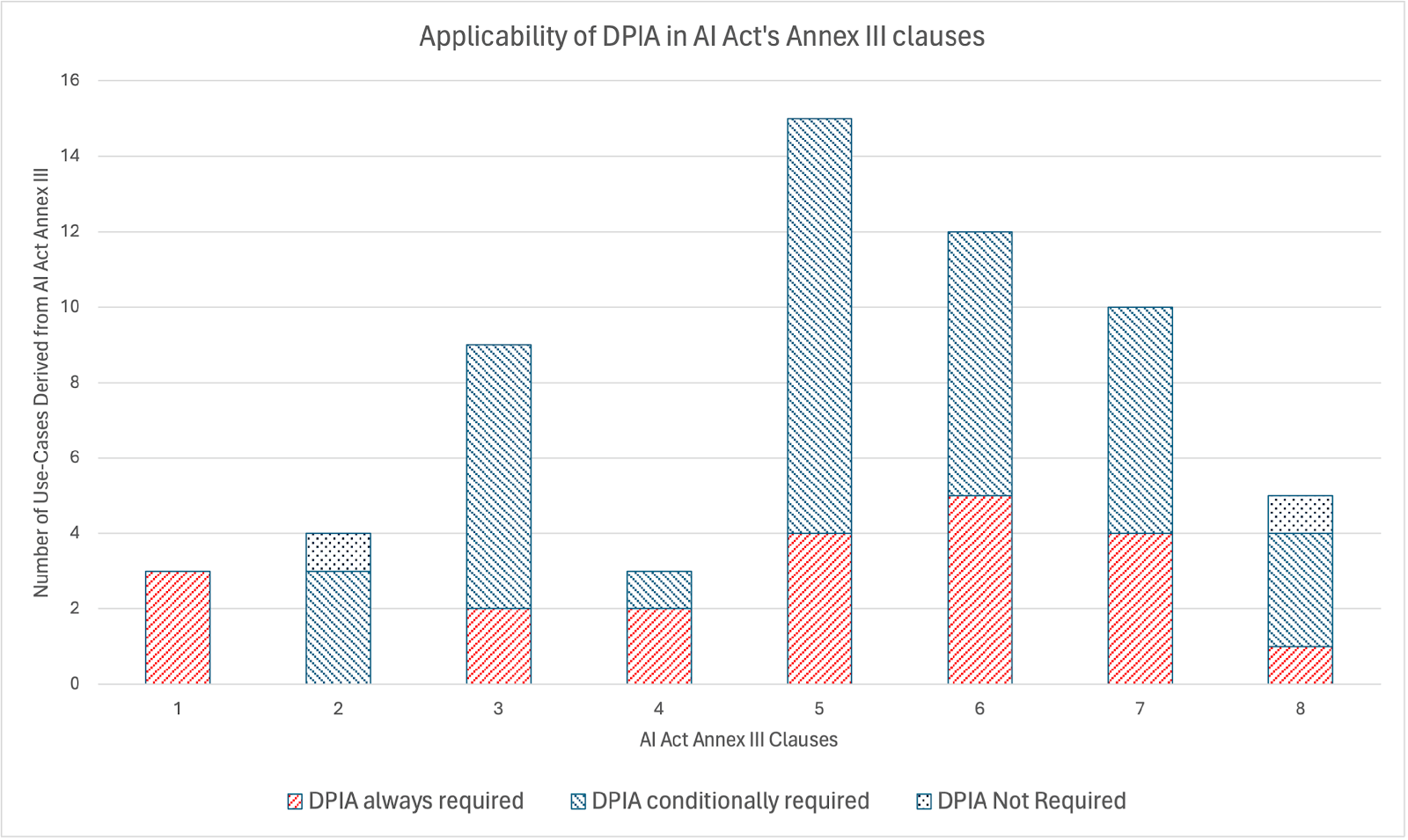

To distinguish such conditional applicability, we created additional identifiers by adding numeric suffixes to the Annex III clause identifier, uniquely identifying each variation. For example, for Annex III-2a, which relates to critical infrastructure, we identified three variations based on the involvement of smart meters (III-2a.1), road traffic video analysis (III-2a.2), and public transport monitoring systems (III-2a.3). Each such variation involves the conditional applicability of a concept (e.g. technology for III-2a) and allows us to match the Annex III clause to a DPIA-required condition, e.g. smart meters in III-2a.1 match DPIA-required conditions from Hungary, Poland, and Romania. We found 36 such additional use cases based on the conditional applicability of key concepts from the DPIA-required conditions. In sum, there are 61 (sub)clauses and use cases, of which a DPIA is always required in 21, conditionally required in 38, and not required in 2, as seen in Figure 3.
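The identifier scheme and the totals reported above can be checked with a short Python sketch. The helper names are hypothetical; the counts are those stated in this section.

```python
def variant_id(clause_id: str, n: int) -> str:
    """Build a conditional use-case identifier by appending a numeric suffix."""
    return f"{clause_id}.{n}"


def is_variant(identifier: str) -> bool:
    """Variants carry a '.' suffix; original Annex III (sub)clauses such as 'III-2a' do not."""
    return "." in identifier


print([variant_id("III-2a", n) for n in (1, 2, 3)])  # ['III-2a.1', 'III-2a.2', 'III-2a.3']

# Totals reported in the text
SUBCLAUSES, ADDITIONAL_USE_CASES = 25, 36
DPIA_ALWAYS, DPIA_CONDITIONAL, DPIA_NOT_REQUIRED = 21, 38, 2

total = SUBCLAUSES + ADDITIONAL_USE_CASES
assert total == 61
assert DPIA_ALWAYS + DPIA_CONDITIONAL + DPIA_NOT_REQUIRED == total
```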

Comparison and Findings

After identifying where high-risk processing activities and personal data are involved in the 25 clauses and subclauses in Annex III and its 36 conditional use cases, we compared the resulting information with each of the 94 conditions under which a DPIA is required. The output of this process identified how many clauses in Annex III require a DPIA always or conditionally, and whether the conditions come from the GDPR or are specific to a member state. A summary of the findings can be found in Figures 2 and 3. Within the table (which can be found in the Appendix), the first set of columns represents the clauses in Annex III and the number of subclauses within each clause. The column ’Additional Use-Cases’ represents the number of possible variations we identified for that clause, as described earlier. The column ’GDPR applies’ represents the number of subclauses and variations in which the GDPR is always applicable based on the involvement of personal data. It shows that the GDPR is always applicable in 23 of the 25 Annex III subclauses, the exceptions being III-2a and III-8a, and in all 36 potential variations. The column ’DPIA always required’ represents the number of cases where the GDPR is applicable and a DPIA is required based on the conditions from the GDPR (and EDPB), while the column ’DPIA conditionally required’ lists cases where a DPIA is conditionally required based on the identified variations of key concepts.

For example, Annex III-3b, regarding evaluating learning outcomes, does not match any of the DPIA-required conditions stated in the GDPR, but if it involves data about vulnerable data subjects, in this case minors in an educational setting, then it will require a DPIA, since vulnerable data subjects are among the DPIA criteria in the EDPB guidelines [8]. This is denoted as variation III-3b.a. Another example is variation III-5b.4, in which financial data is used to establish or assess the creditworthiness of individuals, a type of personal data processing that requires a DPIA in Cyprus, the Czech Republic, Estonia, the Netherlands and Poland.

The table also contains a column ’Member State DPIA’, which lists the country codes of member states whose data protection authorities list processing activities that we identified as involved in the clauses. The column ‘Required for (sub)Clauses’ represents how many countries have a DPIA-required condition applicable to one or more of the subclauses within that clause, and the column ’Required for Use-Cases’ represents the countries that have a DPIA-required condition applicable to one or more of the additional use cases identified for that clause. For example, for Annex III-4 there are 6 countries whose DPIA-required conditions apply to one or more of its subclauses (e.g. for III-4a, regarding recruitment and selection in employment, France requires a DPIA due to processing relating to recruitment, and Austria, the Czech Republic, Denmark, Germany and Greece due to the involvement of AI in processing), and there are 17 additional countries (23 in total) whose DPIA-required conditions apply to one or more of the additional use cases derived from the subclauses (e.g. III-4b.1, concerning work-related relationships and contracts, including monitoring and evaluation, requires a DPIA in Austria, Belgium, Croatia, Cyprus, the Czech Republic, Denmark, Estonia, Germany, Greece, Hungary, Iceland, Italy, Latvia, Liechtenstein, Lithuania, Luxembourg, Malta, the Netherlands, Norway, Slovakia, Slovenia, Spain, and Sweden, due to the monitoring of employment activities and the use of AI). The remaining countries (e.g. 7 of 30 for Annex III-4) had DPIA-required lists with no condition matching the subclauses or their variations.
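The relationship between the two country columns can be expressed with set operations. The Python sketch below reads ’additional’ countries as those matching a derived use case but not already counted for a subclause; the function name and the small country sets are hypothetical and are not taken from the table.

```python
def country_columns(subclause_countries: set, use_case_countries: set,
                    all_countries: set) -> dict:
    """Derive per-clause country counts from hypothetical match sets."""
    additional = use_case_countries - subclause_countries
    matched = subclause_countries | additional
    return {
        "required_for_subclauses": len(subclause_countries),
        "required_for_use_cases": len(additional),
        "total": len(matched),
        "no_match": len(all_countries - matched),
    }


# Toy data: five countries, two matching a subclause, three matching a use case
print(country_columns({"FR", "AT"}, {"AT", "BE", "CY"},
                      {"AT", "BE", "CY", "FR", "SE"}))
```

Under this reading, the subclause count, the additional count, and the no-match count always sum to the number of countries considered (30 in the paper).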

Annex III clauses where DPIA is required

We found that conducting a DPIA was explicitly required in all 23 subclauses of Annex III where the GDPR is explicitly applicable. For the remaining two subclauses, both the GDPR and a DPIA may be applicable, depending on the additional use cases that represent variations of those subclauses.

10 of the 25 subclauses in Annex III explicitly require a DPIA, and 15 conditionally require one, based on the DPIA-required conditions the GDPR defines for the involvement of special categories of personal data. Biometric data is also relevant when assessing practices prohibited under the AI Act, such as those entailing facial recognition (Art.5(1)(e)). It is involved explicitly in 3 subclauses (III-1a-c) and conditionally in one (III-7d.1), for detecting, recognising or identifying natural persons in the context of migration, asylum and border control management. Health data is involved explicitly in one subclause (III-5a) and conditionally in six (III-5c.2, III-5d.3, III-6b.1, III-7a.1, III-7b.1, and III-7c.3). Data on criminal offences, which the GDPR addresses separately in Art.10, is the third type of sensitive data involved. It appears explicitly in 2 subclauses (III-6a and III-6e) and conditionally in 4 (III-6a.3, III-6d.1b, III-6d.2 and III-8a.2), predominantly in law enforcement and once in the administration of justice and democratic processes.

16 of the 25 subclauses in Annex III produce legal effects for the data subjects, and the remaining 9 may produce legal effects depending on the additional use cases. For example, Annex III clause 5(b) concerns evaluating the creditworthiness of natural persons. It produces legal effects because credit scores are used to determine access to services or benefits. Annex III clause 7(c) concerns systems used to examine applications for asylum, visas and residence permits. It produces legal effects because it leads to decisions on the eligibility of the individuals applying for that status or those permits.

We also found that sectors explicitly mentioned in Annex III clauses, such as education, employment and law enforcement, correspond to those mentioned in the GDPR’s DPIA required conditions published by most member states. This implies a necessity to further investigate whether these sectors can themselves be treated as being high-risk, which would make them a priority area for enforcement cooperation between GDPR and AI Act authorities.

Annex III clauses where DPIA may be required

Annex III 8(b) covers AI systems used to influence the outcome of an election or referendum, or voting behaviour. Here a DPIA may be required depending on whether special categories of data, such as political opinions or religious or philosophical beliefs, are involved. Political opinions are a special category under Art.9 GDPR, and processing them explicitly requires a DPIA in 7 countries: Poland, Romania, Cyprus, the Czech Republic, Finland, Italy, and Latvia. Poland specifically requires a DPIA where party affiliation and/or electoral preferences are involved. Belgium and the Netherlands do not explicitly mention elections or voting. They do, however, require a DPIA when processing aims to influence the behaviour of natural persons, which we presume includes influencing their voting behaviour.

A DPIA may also be required for a subclause if the subjects of the system belong to a vulnerable group. This condition emerged in 8 use cases where such data may be involved (III-3a.2, III-3b.1, III-3c.1, III-3d.1, III-5b.3, III-5c.1, III-5d.2, III-6a.2). The vulnerable group in these use cases is minors, who appear mostly in the education sector and in private and public services. Processing the data of vulnerable persons is explicitly mentioned by 20 member states¹, and minors are explicitly mentioned by Austria, Ireland, Italy, Latvia, and Malta. In several of the use cases above, these minors are students. Processing their data for the purpose of assessment requires a DPIA in Hungary and the Netherlands. Most member states (n=20) address this specific group of vulnerable data subjects in various ways, taking into account the sectors where minors' data is frequently processed. However, ten member states make no explicit mention of this group of vulnerable individuals.

As mentioned earlier, a number of use cases were found to require a DPIA if they use either health data (III-5c.2, III-5d.3, III-6b.1, III-7a.1, III-7b.1, III-7c.3) or data relating to criminal offences (III-6a.3, III-6d.1b, III-6d.2, III-8a.2). Only the processing lists of the Netherlands and Poland explicitly mention health data. Health data is a special category of personal data (Art.9), so large-scale processing of it requires a DPIA under Art.35(3)(b) GDPR, yet the other authorities have not listed it explicitly. The other type of personal data that may appear in 4 use cases is criminal offence data. It is listed by 13 states² and addressed by the GDPR (Art.10). Large-scale processing of both types of data requires a DPIA under the GDPR. However, member states differ in whether they state this explicitly. This lack of clear information makes it difficult to interpret what does or does not require an assessment. The result is variance in how DPIAs are implemented and in how fundamental rights and freedoms are protected.

Effect of Variance in DPIA required conditions across Member States

In addition to the GDPR's DPIA-required conditions, the 30 EU/EEA member states have added 82 distinct jurisdiction-specific requirements. Whether an activity should be considered high-risk therefore depends not only on the GDPR, which is a pan-EU regulation, but also on the member states involved (i.e. the organisation's location and the location of the data subjects involved). Such variance risks different levels of data protection for data subjects across the EU/EEA. It also creates complexity for organisations operating across jurisdictions, which must navigate and comply with the various requirements. Ambiguity over which conditions to consider can then lead to inconsistent applications, risk assessments and DPIAs.
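
The following Python sketch makes the dependence on jurisdiction concrete. It models a small excerpt of the processing-activities table as a mapping from condition ID to the member states that list it. A few GDPR-derived conditions (C1, C6, C9) act as a baseline that applies everywhere. Several parts of the sketch are hypothetical: the names `CONDITIONS`, `GDPR_BASELINE` and `applicable_conditions`, and the choice of excerpt (only C23, C31 and C32). The rule that a condition applies when any involved state lists it is a simplifying assumption, not a legal test.

```python
# Hypothetical excerpt of the processing-activities table:
# condition ID -> two-letter codes of the member states whose DPA lists it.
CONDITIONS: dict[str, frozenset[str]] = {
    "C23": frozenset({"BE", "HR", "GR", "HU", "NL", "PL", "RO"}),  # smart meters
    "C31": frozenset({"FR", "GR", "IT", "PL", "SI"}),  # insurance evaluation
    "C32": frozenset({"AT", "CZ", "DK", "DE", "GR"}),  # use of AI in processing
}

# Conditions derived from the GDPR text itself apply in every state
# (only three of C1-C10 are shown in this sketch).
GDPR_BASELINE: frozenset[str] = frozenset({"C1", "C6", "C9"})


def applicable_conditions(jurisdictions: set[str]) -> set[str]:
    """Return the DPIA-required conditions to consider when processing
    involves every member state in `jurisdictions` (simplified rule)."""
    result = set(GDPR_BASELINE)
    for cond_id, states in CONDITIONS.items():
        # Assumption: a jurisdiction-specific condition applies if any
        # involved member state lists it.
        if states & jurisdictions:
            result.add(cond_id)
    return result


if __name__ == "__main__":
    print(sorted(applicable_conditions({"FR"})))        # baseline plus C31
    print(sorted(applicable_conditions({"FR", "DE"})))  # baseline plus C31 and C32
```

Under these assumptions, processing that involves only France adds C31 to the baseline. Adding Germany also brings in C32 (use of AI). The same processing therefore carries a different set of DPIA triggers depending on where it takes place.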

The data protection authorities of Hungary, Poland, and Romania illustrate these differences: their DPIA-required conditions include smart meters used to measure (domestic) consumption. These authorities are concerned that smart meters generate large amounts of data through real-time sensors that transmit it over the internet or by other means. Where AI is used, this qualifies as high-risk under AI Act Annex III 2(a) regarding the supply of water, gas, heating and electricity. Other activities that also qualify under this clause are road traffic management in Austria and Poland, the use of tachographs in France, and the monitoring of road behaviour in Cyprus and Slovenia. Entities operating these types of AI systems in these jurisdictions therefore have a clear indication that a DPIA is required. In other jurisdictions, the requirement is unclear and left to the entity's interpretation of the GDPR and relevant guidelines.

A second example is Annex III-3d regarding monitoring and detecting prohibited behaviour of students during tests. 12 member states require a DPIA for it: Belgium, Austria, the Czech Republic, Lithuania, Greece, Germany, Spain, Iceland, Norway, Latvia, the Netherlands, and Denmark. They do so on the basis of conditions covering the monitoring of schools, the monitoring of individuals in general, or the monitoring of individuals' behaviour. For all of Annex III-3(a-d), the data processed may include data of students who are minors, so we added a use case for each subclause that takes this into account. For these use cases, we found that 10 member states do not explicitly mention minors or vulnerable individuals/groups in their criteria for requiring a DPIA. This lack of explicit DPIA requirements for processing minors' data increases the risk of harm to this vulnerable group and creates inconsistent standards of protection.

A third example is Annex III-5c regarding risk assessment and pricing for life and health insurance, for which a DPIA is required in 5 countries: France, Greece, Italy, Poland, and Slovenia. Lastly, only France requires a DPIA for facilitating recruitment in employment, which also qualifies as high-risk under Annex III-4a. Notably, 5 member states specifically require a DPIA when AI is used in personal data processing: Austria, the Czech Republic, Denmark, Germany and Greece. A DPIA is therefore required for any Annex III use case involving personal data in these countries.
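
The counts in this paragraph amount to a set union. The Python sketch below copies the table rows for C31 (insurance evaluation) and C32 (use of AI in processing). The helper `states_requiring_dpia` is hypothetical. It assumes a use case is described only by the jurisdiction-specific conditions it triggers and ignores the GDPR baseline conditions that apply in every state.

```python
# Rows copied from the processing-activities table (two-letter country codes).
C31_INSURANCE = frozenset({"FR", "GR", "IT", "PL", "SI"})
C32_USE_OF_AI = frozenset({"AT", "CZ", "DK", "DE", "GR"})


def states_requiring_dpia(*triggered: frozenset[str]) -> frozenset[str]:
    """Return the union of member states that list any triggered condition
    (hypothetical helper; an empty call returns an empty set)."""
    return frozenset().union(*triggered)


if __name__ == "__main__":
    # Annex III-5c without AI triggers only the insurance condition.
    without_ai = states_requiring_dpia(C31_INSURANCE)
    # With AI, the "use of AI in processing" condition is triggered as well.
    with_ai = states_requiring_dpia(C31_INSURANCE, C32_USE_OF_AI)
    print(len(without_ai), len(with_ai))  # 5 9
    print(sorted(with_ai - without_ai))  # ['AT', 'CZ', 'DE', 'DK']
```

Greece appears in both rows, so the union for Annex III-5c with AI contains 9 states rather than 10.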

Furthermore, interpreting DPIA requirements for Annex III raises concerns, because whether a DPIA is required depends heavily on conditional use cases. This especially concerns clauses 2(a) critical infrastructure, 3(b) AI systems intended to be used to evaluate learning outcomes, and 3(d) AI systems intended to be used for monitoring and detecting prohibited behaviour of students during tests. Some data protection authorities have clarified which processing activities related to road safety and traffic management require a DPIA. Even so, the interpretation of safety components within critical digital infrastructure remains open to debate. Similarly, interpretations of how to classify AI systems intended for educational purposes, such as monitoring student behaviour during tests, differ across jurisdictions. We found that a DPIA is required if the data involved is of students who are underage, i.e. minors. Otherwise, whether evaluating and monitoring students requires an assessment varies by member state. We therefore highlight the urgent need for further guidance from authorities on whether the entities responsible for the AI systems in Annex III are required to conduct a DPIA.

Findings and Implications of Information Sharing for Risk Assessments

We have demonstrated that the GDPR and the AI Act interpret high-risk concepts differently. Data protection authorities issue different guidelines, and entities operating AI and using personal data must conduct multiple assessments. Divergence over whether a processing activity or AI system is considered high-risk creates a regulatory environment that affects organisations' compliance efforts, operational costs, and innovation. It also makes the harms arising from the use of AI harder to prevent and mitigate. We have outlined specific complications that arise from varying interpretations of whether activities are high-risk and therefore require an impact assessment under the GDPR and the AI Act. The common expected consequences are diverging high-risk classifications amongst actors, overlapping regulatory requirements, increased compliance costs, and inconsistent enforcement across jurisdictions.

The AI value chain consists of all actors, lifecycle stages, and processes involved in the planning, design, development, deployment, and use of AI. The AI Act predominantly considers the actors at the end of the value chain, where AI is deployed. Other entities will nonetheless feel its effect, as requirements such as risk management permeate earlier stages of development in the form of product requirements and market innovation. In this section, we discuss how entities across the AI value chain will be responsible for carrying out assessments, whether as a DPIA or a FRIA. We also discuss how information sharing across the AI development lifecycle can reduce costs and support decision-making, resource allocation, and risk management.

When information is shared across the AI development lifecycle, producing the various assessments takes less time and fewer resources, which saves costs. Sharing also lets other entities use the information, which limits duplicated work. Having multiple eyes on the same information reduces mistakes and further lowers the resources needed to meet compliance requirements. Such information can also speed up decision-making, because it is centralised rather than having to be gathered and validated from multiple sources, and it increases collaboration across departments. On a larger scale, it can support alignment with the national authorities responsible for issuing guidance to those operating in their jurisdiction and reduce the compliance burden on data-driven models.

Information sharing can also play a crucial role in promoting responsible AI practices by reinforcing ethical principles within organisations. Transparency is achieved when stakeholders have access to information regardless of their position in the AI lifecycle. Accountability is strengthened when information is available that details the decisions for which entities can be held accountable. Information sharing can also promote fairness and non-discrimination. Documenting the types of data used and highlighting the potential risks involved helps downstream actors identify and mitigate biases. In sum, this fosters informed risk assessments and inclusive decision-making through the collaborative involvement of different internal and external entities.

The two regulations also differ in when their impact assessments are to be conducted along the data lifecycle. The GDPR regulates the processing of personal data. The AI Act regulates conformant products placed on the European Single Market under a common CE mark, which, under the EU's New Legislative Framework, offers assurances for protecting health, safety and fundamental rights. The AI Act is therefore most applicable at the product deployment and use stages, while the GDPR applies at every stage from the onset of AI development wherever personal data is involved. Art.27(4) of the AI Act allows an existing DPIA to be reused to fulfil the FRIA obligations. Further, Art.27(2) applies to deployers that are public institutions or entities acting on their behalf. For certain categories of AI systems in Annex III, it allows them to reuse the initial FRIA or rely on an existing FRIA from a provider. This implies that a FRIA may be developed in partnership between AI providers and deployers, as well as other entities that have conducted a DPIA. The result is a mechanism in which DPIAs conducted at the start or in the early stages of the product development lifecycle are shared downstream and culminate in a FRIA at product deployment and operation. We therefore argue that the determination of high-risk under the AI Act has consequences across the AI value chain, beyond the entity immediately responsible for implementing it. It feeds risk assessment requirements upstream to AI developers and other actors. These actors are likely to support downstream actors through their existing DPIAs or by sharing the risk assessment information needed to conduct a DPIA.
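
The following Python sketch pictures this handoff as a simple data flow. It is purely illustrative. The classes `RiskEntry`, `DPIA` and `FRIA` and the function `build_fria` are hypothetical and are not a format defined by the GDPR, the AI Act, or any AI Office template. It assumes upstream actors share the risk entries from their DPIAs. The deployer uses them as the starting point of its FRIA and adds only the risks specific to its deployment context, in the spirit of the reuse allowed by Art.27(4).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RiskEntry:
    """One identified risk and its mitigation (hypothetical structure)."""
    description: str
    affected_groups: tuple[str, ...]
    mitigation: str


@dataclass
class DPIA:
    """A DPIA shared by an upstream actor (hypothetical structure)."""
    controller: str  # entity that conducted the DPIA
    stage: str  # lifecycle stage, e.g. "development"
    risks: list[RiskEntry] = field(default_factory=list)


@dataclass
class FRIA:
    """A deployer's FRIA seeded from shared DPIAs (hypothetical structure)."""
    deployer: str
    reused_from: list[str] = field(default_factory=list)  # upstream controllers
    risks: list[RiskEntry] = field(default_factory=list)


def build_fria(deployer: str, shared_dpias: list[DPIA],
               deployment_risks: list[RiskEntry]) -> FRIA:
    """Seed a FRIA with risks from DPIAs shared upstream, then append
    deployment-specific risks, skipping exact duplicates."""
    fria = FRIA(deployer=deployer,
                reused_from=[dpia.controller for dpia in shared_dpias])
    upstream_risks = [risk for dpia in shared_dpias for risk in dpia.risks]
    seen: set[RiskEntry] = set()
    for risk in upstream_risks + list(deployment_risks):
        if risk not in seen:  # frozen dataclasses are hashable
            seen.add(risk)
            fria.risks.append(risk)
    return fria


if __name__ == "__main__":
    bias = RiskEntry("bias in training data", ("applicants",), "rebalanced dataset")
    upstream = DPIA("model developer", "development", [bias])
    oversight = RiskEntry("over-reliance on scores", ("applicants",),
                          "human review of refusals")
    fria = build_fria("public body", [upstream], [bias, oversight])
    print(len(fria.risks), fria.reused_from)  # 2 ['model developer']
```

Recording `reused_from` keeps track of which upstream DPIAs the deployer relied on. The sketch makes no claim about what content Art.27 actually requires in a FRIA.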

Based on this, cooperation should be sought in sharing FRIA, DPIA, and other risk assessment information through formalised model clauses. One example is the model clauses for AI public procurement contracts regarding high-risk AI, published by the Commission in October 2023 [54]. These model clauses require providers to document their risk management system. They do not, however, link this to any specific requirement arising from the deployer's initial FRIA, nor do they offer a FRIA or DPIA as an option within the risk assessment. That said, the document predates the final version of the Act, which confirmed the FRIA requirement. The Commission, specifically the AI Office as per Recital 143 and Art.63(3d), is charged with evaluating and promoting best practice in this area as a measure to support SMEs and start-ups. We therefore suggest developing guidelines that encourage sharing DPIA and FRIA information across the product development lifecycle.

Such an approach would suit a particular challenge in AI risk management: stages of AI system development can be outsourced, with implications for the stages that follow. The entity responsible for each stage would need to be fully informed of all decisions, responsibilities, risk mitigation strategies, errors, corrections, intentions and unforeseen issues arising across the lifecycle, as fully as if it had completed the previous stages itself. Without this understanding, entities cannot make fully informed decisions when creating systems, which results in a lack of trust and transparency. Upstream developers and providers likewise benefit from receiving such information from the entities using their products and services. It gives them an understanding of practical risks and impacts that can be addressed at earlier stages of the lifecycle [55].

Regulatory sandboxes can offer a suitable medium for assessing such flows of information arising from FRIA and DPIA obligations, and for achieving compliance efficiency through information interoperability. This can be implemented alongside Art.27(5) of the AI Act, which requires the Commission's AI Office to develop a template for a questionnaire, including through an automated tool, to facilitate the implementation of FRIA obligations. This builds on the existing work discussed in Section 2 and on the similarities between DPIA and FRIA in both their information requirements and their high-risk determination conditions. The existing body of work on DPIAs can therefore be reused to support FRIA systems, and their effectiveness can be evaluated in a specific member state under the existing GDPR regulatory sandbox mechanisms.

However, the variance in member states’ DPIA required lists creates ambiguities in how this would play out in practice. For example, if different entities are situated in different member states, the information they would retain regarding their DPIAs would be quite different from one another, which would lead to fragmentation of information being shared across the value chain and its use in FRIA. To address this, the DPIA required conditions across EU member states should be harmonised, and in particular where AI would be involved, aligned with the high-risk areas defined in the AI Act’s Annex III.

Conclusion

Our analysis of processing activities requiring a DPIA was based on all authoritative sources, namely the text of the GDPR, guidance by the EDPB, and the processing lists published by 30 EU/EEA Member States. Through this analysis of 95 distinct conditions, we showed how and where the requirement for a DPIA varies substantially across the EU/EEA.

Using the DPIA analysis, we showed that DPIAs, as required by the GDPR, are always necessary in 23 of the 25 subclauses of Annex III of the AI Act. When reasonably foreseeable use cases are considered, a DPIA is necessary in all 25 subclauses if certain conditions are met: the system produces legal effects, or it uses special categories of data, data of vulnerable individuals, or data on criminal offences. We therefore conclude that the high-risk categorisation under Annex III of the AI Act overlaps significantly with the GDPR's DPIA requirements. We highlight that although a FRIA is required only for some Annex III use cases, the GDPR would still require a DPIA, and the scope of a DPIA includes the rights and freedoms covered by a FRIA. DPIA requirements vary across EU/EEA Member States, so a DPIA may be required for an Annex III clause in some countries but not in others, and we explored what this means for implementing the AI Act. We examined these implications specifically in light of the similarities between FRIA and DPIA and how the two would influence information sharing across the AI value chain. Our findings indicate that effective information sharing can significantly help entities internally, for example by optimising resource allocation, and externally by enhancing overall ethical AI practices. The AI Act explicitly acknowledges that DPIAs can be reused in the FRIA process. Our work shows that such reuse would depend on the country involved, because DPIA requirements vary. It would also require AI value chain actors to share their DPIAs at earlier stages to enable FRIAs at later stages.

Our work is important for the enforcement of both the GDPR and the AI Act. For the GDPR, the EDPB should be interested in the variations in DPIA requirements, since it is responsible for ensuring harmonised interpretation and application of the GDPR. Similarly, each of the 30 EU/EEA Data Protection Authorities can use our analysis to update their processing lists to align with and address the AI Act's Annex III high-risk applications. For the AI Office, our analysis demonstrates how requirements for DPIAs vary across the EU and how closely the application of the GDPR is integrated with the AI Act's high-risk systems. On this basis, we argue for close cooperation between AI Act and GDPR enforcers. Our work is also significant for developing guidance on the fundamental rights impact assessments required by the AI Act. Our mapping of DPIA requirements to Annex III clauses can inform that guidance, and it can feed automated tools that support FRIA, building on similar existing work for DPIA.

Our future work involves conducting a similar analysis of the AI systems that are listed in Article 5 of the AI Act detailing prohibited systems which pose a substantial risk to the rights and freedoms of individuals. Analysing how personal data is involved in these systems and whether these risks are accounted for in the framework of GDPR will help inform enforcement actions and regulatory interventions as well as push for appropriate measures to prevent harm to data subjects specifically. More crucially, it is important to investigate whether the development of prohibited systems under the AI Act has corresponding high-risk status at earlier stages which are not subject to the AI Act but can be regulated under the GDPR’s DPIA obligations. Similarly, based on the work described in this article, we also plan to explore how risk information can be shared across the AI value chain by utilising DPIAs and FRIAs.

Analysis of DPIA requirements in EU/EEA Member States

Table of ISO 3166-1 alpha-2 country codes for EU/EEA Member States

| Country | ISO 3166-1 alpha-2 code |
|---|---|
| Austria | AT |
| Belgium | BE |
| Bulgaria | BG |
| Croatia | HR |
| Cyprus | CY |
| Czech Republic | CZ |
| Denmark | DK |
| Estonia | EE |
| Finland | FI |
| France | FR |
| Germany | DE |
| Greece | GR |
| Hungary | HU |
| Iceland | IS |
| Ireland | IE |
| Italy | IT |
| Latvia | LV |
| Liechtenstein | LI |
| Lithuania | LT |
| Luxembourg | LU |
| Malta | MT |
| Netherlands | NL |
| Norway | NO |
| Poland | PL |
| Portugal | PT |
| Romania | RO |
| Slovakia | SK |
| Slovenia | SI |
| Spain | ES |
| Sweden | SE |

Processing activities requiring a DPIA. The table lists all 94 identified processing activities considered to be high-risk by the GDPR, the European Data Protection Board (EDPB), and the EU member states and European Economic Area countries (member states listed in alphabetical order).

| ID | DPIA Condition | Member States |

|---|---|---|

| C1 | Large Scale processing of Special category personal data (Art.35-3b) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C2 | Processing of Special Category of personal data for decision-making (Art.35-3b) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C3 | Large scale purposes (Recital 91) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C4 | Profiling and/or processing of vulnerable persons data (Art.35-3b) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C5 | Large scale Systematic monitoring of a publicly accessible area (Art.35-3c) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C6 | Processing resulting in legal effects (Art.35-3a) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C7 | (Large scale) profiling (Art.35-3a) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C8 | Automated decision making and/or automated processing with legal or similar effect (Art.35-3a) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C9 | Use of new technology or innovative use (Art.35-1) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C10 | Large scale Processing of personal data relating to criminal offences or unlawful or bad conduct (Art.35-3b) | GDPR, EDPB, AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LT, LU, MT, NL, NO, PL, PT, RO, SK, SI, ES, SE |

| C11 | Processing of Biometric data | AT, BE, BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, LV, LI, LT, LU, MT, NL, NO, PL, PT, SK, SI, ES |

| C12 | Processing of Genetic data | BG, HR, CY, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, LV, LI, LT, LU, MT, NL, NO, PL, PT, SK, SI, ES |

| C13 | (Large-scale) Processing of communication and/or location data | AT, BE, BG, HR, CY, CZ, EE, FI, FR, DE, GR, HU, IE, LV, LT, LU, NL, NO, PL, PT, RO, SK, SI |

| C14 | Evaluation or scoring of individuals (including profiling or predicting) | EDPB, CY, DK, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LU, NO, PL, PT, RO, SK, ES, SE |

| C15 | Matching or Combining separate data sets/ registers | EDPB, AT, CY, CZ, DK, FI, DE, GR, HU, IE, IT, LV, LI, LU, NO, PL, SI, ES, SE |

| C16 | (Large scale) processing of employee activities | HR, CY, CZ, EE, FR, DE, GR, HU, IS, LV, LI, LT, LU, MT, NL, NO, PL, SK, SI |

| C17 | Processing resulting in Access to or exclusion of services | BE, HR, GR, IS, IT, LV, LI, NO, PL, SI, ES, SE |

| C18 | Processing of data generated by devices connected to the Internet of things | HR, CY, GR, HU, IS, IT, NL, NO, PL, PT, RO, SI |

| C19 | Processing / Preventing a data subject from exercising a right | EDPB, CY, DE, GR, IT, LV, LI, LU, PL, SI, ES |

| C20 | Profiling resulting in exclusion/suspension/rupture from a contract | BE, HR, CY, FR, GR, IS, IT, LI, SI, ES, SE |

| C21 | Processing data concerning asylum seekers | AT, CY, IE, IT, LV, MT, SI, SE |

| C22 | Processing of data revealing political opinions | CY, CZ, FI, IT, LV, PL, RO |

| C23 | The purpose of data processing is the application of ‘smart meters’ set up by public utilities providers (the monitoring of consumption customs). | BE, HR, GR, HU, NL, PL, RO |

| C24 | Combining and/or matching data sets from two or more processing operations carried out for different purposes and/or by different controllers in the context of data processing that goes beyond the processing normally expected by a data subject, (provided that the use of algorithms can make decisions that significantly affect the data subject.) | AT, CZ, GR, IE, IT, LU, PL |

| C25 | Processing operations aimed at monitoring, monitoring or controlling data subjects, in particular where the purpose is to influence them by profiling and automated decision-making. | BE, CY, DE, ES, FR, GR, HU, IT, NL, PT |

| C26 | Processing of data for research purposes | AT, BE, CY, DE, FR, HU, IT, LU, MT, PT |

| C27 | Processing data of children, including consent in relation to information society services | BE, CY, CZ, DE, FR, HU, IT, LV, PL, SE |

| C28 | processing concerns personal data that has not been obtained from the data subject, and providing this information would prove difficult/ impossible | CZ, GR, IS, LV, LT, PT |

| C29 | (credit score) The purpose of data processing is to assess the creditability of the data subject by way of evaluating personal data in large scale or systematically | CY, FR, HU, NL, SK, SI |

| C30 | Processing of personal data for scientific or historical purposes where it is carried out without the data subject consents and together with at least one of the Criteria; | LV, LT, LU, NO, PT, SK |

| C31 | Processing concerned with evaluating indivduals for various insurance purposes | FR, GR, IT, PL, SI |

| C32 | Use of AI in processing | AT, CZ, DK, DE, GR |

| C33 | Large scale processing in the context of fraud prevention | CY, EE, FR, HU, NL |

| C34 | Large scale processing of financial data | CY, CZ, EE, NL, PL |

| C35 | The processing of children’s personal data for profiling or automated decision-making purposes or for marketing purposes, or for direct offering of services intended for them; | HR, HU, IS, LT, RO |

| C36 | Use of facial recognition technology as part of the monitoring of a public–accessible area | CY, DK, IT, PL, RO |

| C37 | The use of new technologies or technological solutions for the processing of personal data or with the possibility of processing personal data to analyse or predict the economic situation, health, personal preferences or interests, reliability or behaviour, location or movements of natural persons; | HR, DE, HU, IS |

| C38 | The processing of personal data by linking, comparing or verifying matches from multiple sources | HR, DE, LI, SI |

| C39 | Large scale systematic processing of personal data concerning health and public health for public interest purposes as is the introduction and use of electronic prescription systems and the introduction and use of electronic health records or electronic health cards. | FR, GR, HU, NL |

| C40 | Large scale data collection from third parties | GR, HU, IS, NO |

| C41 | Processing of data used to assess the behaviour and other personal aspects of natural persons | AT, BE, HR, DE |

| C42 | Health data collected automatically by implantable medical device | BE, CY, IS, PT |

| C43 | Processing operations aimed at monitoring, monitoring or controlling data subjects, in particular Roads with public transport which can be used by everyone | AT, CY, FR, PL |

| C44 | The processing of considerable amounts of personal data for law enforcement purposes. | HU, LI, NL |

| C45 | Processing of personal data for the purpose of systematic assessment of skills, competences, outcomes of tests, mental health or development. (Sensitive personal data or other information reveals a sensitive nature and systematic monitoring). | IS, NO, PL |

| C46 | Extensive processing of sensitive personal data for the purpose of developing algorithms | IS, IE, NO |

| C47 | Extensive processing of data subject to social, professional or special official secrecy, even if it is not data pursuant to Article 9(1) and (10 GDPR) | EE, DE, LI |

| C48 | Processing that involves an assessment or classification of natural persons | AT, HU, PL |

| C49 | Use of a video recording system for monitoring road behaviour on motorways. The controller intends to use a smart video analysis system to isolate vehicles and automatically recognise their plates. | CY, PL, SI |

| C50 | Electronic monitoring at a school or preschool during school or preschool hours. (Systematic monitoring and vulnerable data subjects). | IS, NO |

| C51 | The purpose of data processing is to assess the solvency of the data subject by way of evaluating personal data in large scale or systematically | HU, SK |

| C52 | Where personal data are collected from third parties in order to be subsequently taken into account in the decision to refuse or terminate a specific service contract with a natural person | BE, HR |

| C53 | Processing of personal data of children in the direct offering of information society services. | BG, LV |

| C54 | Migration of data from existing to new technologies where this is related to large-scale data processing. | BG, DK |

| C55 | (Systematic) transfer of special data categories between controllers | BE, NL |

| C56 | Large-scale processing of behavioural data | BE, FR |

| C57 | Supervision of the data subject, which is carried out in the following cases: a. if it is carried out on a large scale; b. if it is carried out at the workplace; c. if it applies to specially protected data subjects (e.g. health care, social care, prison, educational institution, workplace). | LV, NL |

| C58 | Large-scale tracking of data subjects | LV, LI |

| C59 | Large-scale processing of health data | NL, PL |

| C60 | Processing of personal data where data subjects have limited abilities to enforce their rights | CZ, SI |

| C61 | Processing personal data with the purpose of providing services or developing products for commercial use that involve predicting working capacity, economic status, health, personal preferences or interests, trustworthiness, behaviour, location or route (Sensitive data or data of highly personal nature and evaluation/scoring) | EE, NO |

| C62 | Innovative sensor-based or mobile-based, centralised data collection | DE, SI |

| C63 | Collection of public data from social media networks for profiling. | CY, PL |

| C64 | Processing operations including capturing locations which may be entered by anyone due to a contractual obligation | AT |

| C65 | Processing operations including recording places which may be entered by anyone on the basis of the public interest | AT |

| C66 | Processing operations including image processing using mobile cameras for the purpose of preventing or averting dangerous attacks or criminal associations in public and non-public spaces | AT |

| C67 | Processing operations including image and acoustic processing for the preventive protection of persons or property on private residential properties not used exclusively by the controller and the persons living in their common household | AT |

| C68 | Processing operations including monitoring of churches, houses of prayer, as far as they are not already covered by lit. b and lit. e, and other institutions that serve the practice of religion in the community. | AT |

| C69 | Camera surveillance | NL |

| C70 | Camera surveillance in schools or kindergartens during opening hours. | NO |

| C71 | Processing operations carried out pursuant to Article 14 GDPR where the information that should be provided to the data subject is subject to an exception under Article 14 | SK |

| C72 | Processing of personal data with a link to other controllers or processors | CZ |

| C73 | Large scale systematic processing of data of high significance or of a highly personal nature | GR |

| C74 | The use of the personal data of pupils and students for assessment. | HU |

| C75 | Processing operations establishing profiles of natural persons for human resources management purposes | FR |

| C76 | Processing of health data implemented by health institutions or social medical institutions for the care of persons. | FR |

| C77 | Investigation of applications and management of social housing | FR |

| C78 | Processing for the purpose of social or medico-social support for persons | FR |

| C79 | File processing operations that may contain personal data of the entire national population | LU |

| C80 | Insufficient protection against unauthorised reversal of pseudonymisation. | IE |

| C81 | When the data controller is planning to set up an application, tool, or platform for use by an entire sector that also processes special categories of personal data. | HU |

| C82 | Large scale systematic processing of personal data with the purpose of introducing, organizing, providing and monitoring the use of electronic government services | GR |

| C83 | Processing of location data for the execution of decisions in the area of judicial enforcement | DK |

| C84 | Processing of personal data in the context of the use of digital twins | DK |

| C85 | Processing of personal data using neurotechnology | DK |

| C86 | The processing of personal data using devices and technologies where an incident may endanger the health of one or more persons | HR |

| C87 | Where large-scale data is collected from third parties in order to analyse or predict the economic situation, health, personal preferences or interests, reliability or behaviour, location or displacement of natural persons | BE |

| C88 | Processing of personal data carried out by a controller with a main establishment outside the EU | BG |

| C89 | Regular and systematic processing where the provision of information under Article 19 of Regulation (EU) 2016/679 is impossible or involves disproportionate effort | BG |

| C90 | Processing operations in the personal area of persons, even if the processing is based on consent. | AT |

| C91 | (Large-scale) Managing alerts and social and health reports or professional reports e.g. COVID-19 | FR |

| C92 | Processing using data from external sources | FR |

| C93 | Anonymisation of personal data | DE |

| C94 | Acquiring personal data where source is unknown | IE |
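
The table above can be queried once it is held as structured data, for instance to see which conditions a given DPA lists, or which conditions appear in only one national list (one way the variance between DPAs shows up). The Python sketch below is an illustration under stated assumptions: it uses a hand-picked excerpt of rows (C37, C41, C48, C51, C84, C85, C93) rather than the full table, and the helper names `conditions_listed_by` and `single_dpa_conditions` are hypothetical.

```python
# Hypothetical excerpt of the condition table above: condition id -> DPAs listing it.
CONDITIONS: dict[str, tuple[str, ...]] = {
    "C37": ("HR", "DE", "HU", "IS"),
    "C41": ("AT", "BE", "HR", "DE"),
    "C48": ("AT", "HU", "PL"),
    "C51": ("HU", "SK"),
    "C84": ("DK",),
    "C85": ("DK",),
    "C93": ("DE",),
}


def condition_number(cid: str) -> int:
    """Numeric part of an id such as 'C37', so that C9 sorts before C10."""
    return int(cid[1:])


def conditions_listed_by(dpa: str, table: dict[str, tuple[str, ...]] = CONDITIONS) -> list[str]:
    """Condition ids whose DPA column includes the given country code."""
    return sorted((cid for cid, dpas in table.items() if dpa in dpas), key=condition_number)


def single_dpa_conditions(table: dict[str, tuple[str, ...]] = CONDITIONS) -> dict[str, str]:
    """Conditions that appear in exactly one national list, mapped to that DPA."""
    return {cid: dpas[0] for cid, dpas in table.items() if len(dpas) == 1}


if __name__ == "__main__":
    print(conditions_listed_by("DE"))
    print(single_dpa_conditions())
```

Even within this small excerpt, C84 (digital twins) and C85 (neurotechnology) appear only in the Danish list.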

Identifying information for determining DPIA requirements in the AI Act’s Annex III

The following list expands on the GDPR concepts found in Annex III (Sections 4.2-4.5 of this paper). Identifiers consisting of a number and a letter (e.g. 1a, 2a, 3a) denote the 25 clauses and subclauses found in Annex III. Identifiers of the form number/letter.number (e.g. 2a.1, 3b.1) denote the use cases we identified in our analysis that involve personal data and require conducting a DPIA under the GDPR or by individual member states.
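If the mapping below is maintained as structured data, the two identifier forms can be told apart mechanically. The following Python sketch is a hypothetical helper, not part of our analysis: `parse_annex_id`, `AnnexId` and the regular expression are assumptions that encode only the two forms described above (a clause such as `2a` and a use case such as `2a.1`).

```python
import re
from typing import NamedTuple, Optional

# Assumed pattern: area number + clause letter, optionally ".<use-case number>".
_ID_PATTERN = re.compile(r"^(?P<area>\d+)(?P<clause>[a-z])(?:\.(?P<use_case>\d+))?$")


class AnnexId(NamedTuple):
    area: int                # Annex III area number, e.g. 2 for critical infrastructure
    clause: str              # clause letter within the area, e.g. "a"
    use_case: Optional[int]  # use-case number, or None for the Annex III clause itself

    @property
    def is_use_case(self) -> bool:
        return self.use_case is not None

    @property
    def parent(self) -> str:
        # The Annex III clause an identifier belongs to, e.g. "2a" for "2a.1".
        return f"{self.area}{self.clause}"


def parse_annex_id(identifier: str) -> AnnexId:
    """Parse '1a' or '2a.1' style identifiers; raise ValueError otherwise."""
    match = _ID_PATTERN.match(identifier.strip())
    if match is None:
        raise ValueError(f"not an Annex III clause or use-case id: {identifier!r}")
    use_case = match.group("use_case")
    return AnnexId(
        area=int(match.group("area")),
        clause=match.group("clause"),
        use_case=int(use_case) if use_case is not None else None,
    )


if __name__ == "__main__":
    for ident in ["1a", "2a.1", "3b.1"]:
        parsed = parse_annex_id(ident)
        print(ident, parsed.parent, parsed.is_use_case)
```

Keeping the parent clause explicit makes it straightforward to group each use case (e.g. 3b.1) with the Annex III clause (3b) it refines.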

Annex III 1a. Biometrics: An AI system intended to be used for the identification of people. It MAY produce legal effects on subjects, involves remote processing*, and involves special category personal data, specifically biometrics*. A DPIA is required under GDPR and EDPB guidelines due to the involvement of special category personal data, and in addition by member states: AT, BE, BG, HR, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, LV, LI, LT, LU, MT, NL, NO, PL, PT, SK, SI, ES (due to the involvement of biometric data).

Annex III 1b. Biometrics: An AI system intended to be used for the biometric categorisation of people. It MAY produce legal effects on subjects; its processing context includes inferred data* and profiling (categorisation), and it involves special categories of personal data, specifically biometrics, as well as data related to protected attributes or characteristics*. A DPIA is required under GDPR and EDPB guidelines due to the involvement of special category personal data, and in addition by member states: AT, BE, BG, HR, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, LV, LI, LT, LU, MT, NL, NO, PL, PT, SK, SI, ES (due to the involvement of biometric data).

Annex III 1c. Biometrics: An AI system intended to be used for the identification of people. It MAY produce legal effects on subjects, its processing context involves remote processing*, and it involves special categories of personal data, specifically biometrics*. A DPIA is required under GDPR and EDPB guidelines due to the involvement of special category personal data, and in addition by member states: AT, BE, BG, HR, CZ, DK, EE, FI, FR, DE, GR, HU, IS, IE, LV, LI, LT, LU, MT, NL, NO, PL, PT, SK, SI, ES (due to the involvement of biometric data).

Annex III 2a. Critical Infrastructure: An AI system intended to be used for controlling the safety of critical digital infrastructure/road traffic/supply of water/gas/heating/electricity, which MAY produce legal effects on the subject. A DPIA is NOT required under GDPR and EDPB guidelines, but is required by member states: AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 2a.1. A use case for the above Annex III clause 2a involves controlling the safety of critical digital infrastructure/road traffic/supply of water/gas/heating/electricity, by using smart meters to monitor individual household consumption and large-scale processing of personal data. This may produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines and in addition by member states: HU, PL, RO (Because processing activities include using smart meters), AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 2a.2. A use case for the above Annex III clause 2a involves controlling the safety of critical digital infrastructure/road traffic/supply of water/gas/heating/electricity, specifically by using road traffic analysis and large-scale processing of personal data. This may produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: AT, CY (Because processing activities include road traffic analysis), AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 2a.3. A use case for the above Annex III clause 2a involves controlling the safety of critical digital infrastructure/road traffic/supply of water/gas/heating/electricity, by monitoring public transport using large-scale processing of personal data. This may produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: AT, CZ, DK, DE, GR (Due to the use of AI in processing), RO, SK (Because processing activities include monitoring of public transport).
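Each entry for clause 2a and its use cases combines a GDPR-level requirement with member-state lists grouped by reason, so a country can appear under more than one reason (AT in 2a.2, for example). The Python sketch below is an illustration only: it transcribes the entries for 2a to 2a.3 into a hypothetical `DpiaMapping` record and deduplicates the member states per entry. The names `DpiaMapping`, `member_states` and `is_required_in` are assumptions, and `is_required_in` simply treats a requirement under GDPR and EDPB guidelines as applying in every country.

```python
from dataclasses import dataclass, field

# Member-state list shared by 2a to 2a.3 for the reason "use of AI in processing".
AI_REASON = "use of AI in processing"
AI_STATES = ("AT", "CZ", "DK", "DE", "GR")


@dataclass
class DpiaMapping:
    """Hypothetical record for one Annex III clause or use case."""
    gdpr_required: bool  # True if a DPIA is required under GDPR and EDPB guidelines
    # reason -> country codes of member states whose lists require a DPIA for it
    member_state_reasons: dict[str, tuple[str, ...]] = field(default_factory=dict)

    def member_states(self) -> set[str]:
        """Distinct member states requiring a DPIA, whatever the reason."""
        states: set[str] = set()
        for codes in self.member_state_reasons.values():
            states.update(codes)
        return states

    def is_required_in(self, country: str) -> bool:
        """Assumption: a GDPR-level requirement applies in every country."""
        return self.gdpr_required or country in self.member_states()


# Transcribed from the entries for Annex III 2a, 2a.1, 2a.2 and 2a.3.
CRITICAL_INFRASTRUCTURE = {
    "2a": DpiaMapping(False, {AI_REASON: AI_STATES}),
    "2a.1": DpiaMapping(True, {"smart meters": ("HU", "PL", "RO"), AI_REASON: AI_STATES}),
    "2a.2": DpiaMapping(True, {"road traffic analysis": ("AT", "CY"), AI_REASON: AI_STATES}),
    "2a.3": DpiaMapping(True, {AI_REASON: AI_STATES,
                               "monitoring of public transport": ("RO", "SK")}),
}

if __name__ == "__main__":
    for ident, mapping in CRITICAL_INFRASTRUCTURE.items():
        print(ident, sorted(mapping.member_states()), mapping.is_required_in("FR"))
```

For clause 2a, `is_required_in("FR")` is False because only the five listed states require a DPIA, whereas for 2a.2 the two reason lists collapse to six distinct states (AT, CY, CZ, DE, DK, GR).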

Annex III 3a. Education and Vocational Training: An AI system intended to be used for determining access/admission or assigning persons, utilising processing activities such as automated decision-making and profiling. This will produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 3a.1. A use case for the above Annex III clause 3a involves determining access/admission or assigning persons, which utilises processing activities such as assessing or classifying natural persons, and systematic assessment of skills/competences/outcomes of tests/mental health/development. This will produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: AT, DE, LV, ES (Because of the use of the processing activity: assessing or classifying people), AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 3a.2. A use case for the above Annex III clause 3a involves determining access/admission or assigning persons, which utilises processing activities such as automated decision-making, profiling, assessing or classifying natural persons, and systematic assessment of skills/competences/outcomes of tests/mental health/development. This will produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: HU, NL (student assessment), IE, IT, LT, MT, AT (Minors/vulnerable), AT, CY, CZ, DE, ES, FI, FR, HU, IE, IT, LV, LI, LT, MT, NO, PT, RO, SK, SI, SE (Because of the use of Vulnerable data subject data), AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 3b. Education and Vocational Training: An AI system intended to be used for evaluating learning outcomes, by utilising processing activities such as evaluation or scoring and systematic assessment of skills/competences/outcomes of tests/mental health/development. This will not produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: IS, NO, PL (Because of the use of the processing activity: assessment), CY, DK, FI, FR, DE, GR, HU, IS, IE, IT, LV, LI, LU, NO, PL, PT, RO, SK, ES, SE (Because of the use of the processing activity: evaluation or scoring), HU, NL (Due to the presence of student assessment processing activities), AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 3b.1. A use case for the above Annex III clause 3b involves evaluating learning outcomes, by utilising processing activities: evaluation or scoring, and systematic assessment of skills/competences/outcomes of tests/mental health/development. This will not produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: HU, NL (Due to the presence of student assessment processing activities), IE, IT, LT, MT, AT (Required because data specifically from minors is used), AT, CY, CZ, DE, ES, FI, FR, HU, IE, IT, LV, LI, LT, MT, NO, PT, RO, SK, SI, SE (Because of the use of Vulnerable data subject data), AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 3c. Education and Vocational Training: An AI system intended to be used for assessing the appropriate level of education and determining access, by use of processing activities such as automated decision-making, profiling, assessing or classifying natural persons, and systematic assessment of skills/competences/outcomes of tests/mental health/development. This may produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: AT, CZ, DK, DE, GR (Due to the use of AI in processing).

Annex III 3c.1. A use case for the above Annex III clause 3c involves assessing the appropriate level of education and determining access, by use of processing activities such as automated decision-making, profiling, assessing or classifying natural persons, and systematic assessment of skills/competences/outcomes of tests/mental health/development. This may produce legal effects on subjects. A DPIA is required under GDPR and EDPB guidelines, and in addition by member states: HU, NL (Due to the presence of student assessment activities), IE, IT, LT, MT, AT (Required because data specifically from minors is used), AT, CY, CZ, DE, ES, FI, FR, HU, IE, IT, LV, LI, LT, MT, NO, PT, RO, SK, SI, SE (Because of the use of Vulnerable data subject data).
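The education use cases show how heavily the member-state lists overlap: a single country can trigger a DPIA for the same use case on several grounds. The Python sketch below is illustrative only; it transcribes the reasons listed for use case 3b.1 and inverts them into country -> reasons. The reason labels are shortened paraphrases, and `reasons_by_country` is a hypothetical helper, not part of our method.

```python
from collections import defaultdict

# Reasons and country codes transcribed from use case 3b.1; the short reason
# labels are paraphrases chosen for this sketch.
USE_CASE_3B1: dict[str, list[str]] = {
    "student assessment": ["HU", "NL"],
    "data from minors": ["IE", "IT", "LT", "MT", "AT"],
    "vulnerable data subjects": [
        "AT", "CY", "CZ", "DE", "ES", "FI", "FR", "HU", "IE", "IT",
        "LV", "LI", "LT", "MT", "NO", "PT", "RO", "SK", "SI", "SE",
    ],
    "use of AI in processing": ["AT", "CZ", "DK", "DE", "GR"],
}


def reasons_by_country(reasons: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert reason -> countries into country -> alphabetically sorted reasons."""
    inverted: dict[str, set[str]] = defaultdict(set)
    for reason, countries in reasons.items():
        for country in countries:
            inverted[country].add(reason)
    return {country: sorted(rs) for country, rs in sorted(inverted.items())}


if __name__ == "__main__":
    by_country = reasons_by_country(USE_CASE_3B1)
    print(len(by_country), "member states list at least one trigger")
    for country, rs in by_country.items():
        if len(rs) > 1:  # countries that require a DPIA on more than one ground
            print(country, rs)
```

Applied to 3b.1, the four lists collapse to 23 distinct member states, and AT appears under three separate reasons (minors, vulnerable data subjects, and use of AI).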